Why Data Quality Is Important

Data quality is fundamental to all operational and analytics endeavors. Think about how much data drives any set of business decisions, the variety of that data and how it is pulled together. As organizations increasingly rely on many different pieces of information to understand business performance, the quality of the data used is critical to knowing how to steer ongoing and new initiatives.

Data comes in many different forms: acquired, legacy, scheduled, intermittent, structured, unstructured, etc. This makes monitoring its quality increasingly challenging. In the past, running statistical profiles of data may have clearly suggested the best ways to fix it, but with more sources of data, more operational reference data and more data types (online, real-time statistics, text, images, etc.), this is no longer a straightforward process.

High quality data is trusted data. It is consistent and unambiguous. For data to be trusted it must be defined and monitored as it travels from its initial creation or collection, through to its ultimate end—archival or deletion. Poor quality data, given the increasing number of data sources and the increasing complexity of analytic capabilities, can become a serious liability to the organization. Sharing poor quality data can lead to costly business decisions based on incomplete or inaccurate information. Utilizing poor quality data can lead to regulatory liabilities, legal risk and significant costs in productivity.

Poor data quality destroys business value. Recent Gartner research has found that organizations believe poor data quality to be responsible for an average of $15 million per year in losses. A frequently cited estimate originating from IBM suggests the yearly cost of data quality issues in the U.S. during 2016 alone was about $3.1 trillion. Lack of trust by business managers in data quality is commonly cited among chief impediments to decision-making.

There are several well-known dimensions of data quality:

- Accuracy

- Completeness

- Consistency/Integrity

- Uniqueness

- Timeliness

- Relevance
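
Some of these dimensions can be measured directly. The following is a minimal sketch, not a definitive implementation, of how completeness, uniqueness and timeliness might be scored for a small set of records. The record layout, the field names and the 90-day freshness window are all assumptions made for illustration.

```python
from datetime import date

# Hypothetical customer records; field names are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None,              "updated": date(2024, 5, 3)},
    {"id": 3, "email": "ana@example.com", "updated": date(2023, 1, 15)},
    {"id": 4, "email": "li@example.com",  "updated": date(2024, 4, 20)},
]

def completeness(rows, field):
    """Share of rows in which the field is populated."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)

def uniqueness(rows, field):
    """Share of populated values that are distinct."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    return len(set(values)) / len(values) if values else 1.0

def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within max_age_days of the as_of date."""
    fresh = sum(1 for r in rows if (as_of - r[field]).days <= max_age_days)
    return fresh / len(rows)

as_of = date(2024, 5, 10)  # assumed reporting date
scores = {
    "completeness(email)": completeness(records, "email"),
    "uniqueness(email)": uniqueness(records, "email"),
    "timeliness(updated)": timeliness(records, "updated", as_of, 90),
}
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

Accuracy and relevance are harder to score this way, because they usually require comparison against a trusted reference or a judgment about business purpose.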

Unfortunately, it is a huge challenge to monitor and manage each of these dimensions for all the data in use. Iteratively checking data quality as data is integrated and transformed throughout the data lifecycle is a very complex task. This is the reason we promote establishing a dedicated data quality monitoring and management process, as well as dedicated data quality staff and rules for best practices.

Given not only the complexity of data quality management, but also the importance of having trusted data, organizations need to keep this activity top-of-mind as they invest in data management tools. Keep in mind that data quality underlies the success of ALL your data initiatives: Data Governance, Next Generation Analytics, Customer 360 and Data Lake journeys. Investing in data quality infrastructure is a very effective way to maximize the productivity of all end users of your data, since trusted data avoids the time wasted locating, validating and fixing (usually inconsistently or in silos) the data used for reports and analyses.

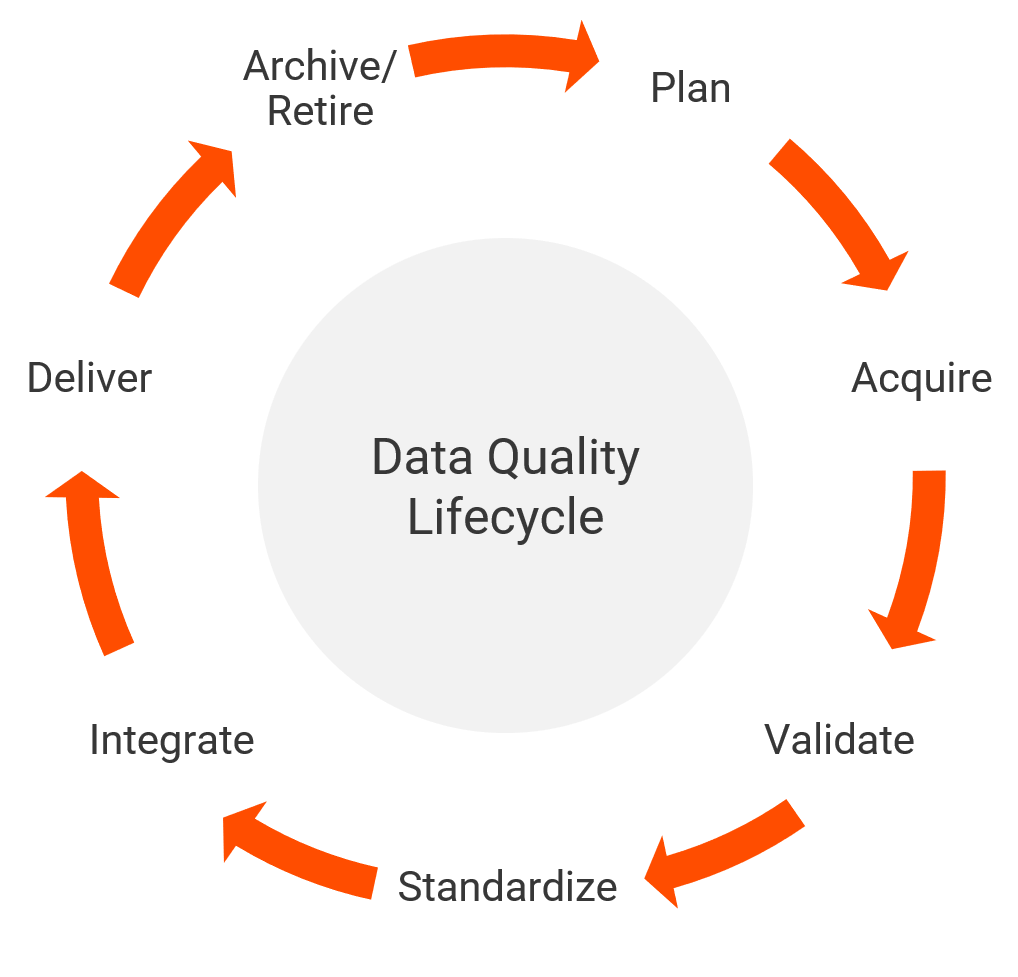

Approach to Data Quality Management: The Data Lifecycle

It is important to consider not only what data is collected but also how it travels and is managed throughout its lifecycle. Data is acquired or created for business use either from discrete transactions or to build a profile of a business entity (e.g., master data such as Customer, Vendor, Provider). As it is ‘ingested’, it needs to be evaluated for quality and usefulness: is it fit for business purpose? Does it enhance our understanding of what has taken place or will take place in the future?

There are many costs associated with acquiring/creating data once it has been ingested: validating its quality, cleansing, standardizing, integrating, maintaining, developing business rules for sharing, protecting its distribution and finally purging or archiving it to maintain relevant data.

At each step of this progression, it is advisable that checkpoints be established to ensure that the health of the data is preserved. Here are some of the activities to perform at each point.

- Plan—define business requirements, data requirements, functional requirements

- Acquire—establish channels for transmission, data sources and applications

- Validate—determine acceptance criteria, thresholds, references, mechanisms

- Standardize—apply business and data rules, references, matching rules

- Integrate—determine integration rules, documentation of sources and targets, scheduling

- Deliver—enforce security and access rules and rights, governance policies, delivery formats
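
As an illustration of the Validate checkpoint, the sketch below applies per-field acceptance criteria to an incoming batch and then compares the reject rate against a threshold. The field names, the country reference list and the 10% threshold are hypothetical; real criteria would come from the business and data requirements defined in the Plan stage.

```python
# Hypothetical acceptance criteria: one check per field.
RULES = {
    "customer_id": lambda v: isinstance(v, str) and v.strip() != "",
    "country": lambda v: v in {"US", "CA", "MX"},  # assumed reference list
    "balance": lambda v: isinstance(v, (int, float)) and v >= 0,
}
MAX_REJECT_RATE = 0.10  # assumed batch-level threshold

def validate_batch(rows):
    """Split rows into accepted and rejected, then apply the batch threshold."""
    accepted, rejected = [], []
    for row in rows:
        # Collect the names of all fields that fail their check.
        failures = [f for f, check in RULES.items() if not check(row.get(f))]
        if failures:
            rejected.append((row, failures))
        else:
            accepted.append(row)
    reject_rate = len(rejected) / len(rows) if rows else 0.0
    return accepted, rejected, reject_rate <= MAX_REJECT_RATE

batch = [
    {"customer_id": "C001", "country": "US", "balance": 120.0},
    {"customer_id": "C002", "country": "CA", "balance": 0},
    {"customer_id": "", "country": "US", "balance": 15.5},
    {"customer_id": "C004", "country": "FR", "balance": -3},
]
accepted, rejected, passed = validate_batch(batch)
for row, failures in rejected:
    print(row["customer_id"] or "<missing id>", "failed:", failures)
print("batch accepted:", passed)
```

Recording which rule each rejected row failed, rather than only a pass/fail flag, makes it easier to trace a problem back to its source.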

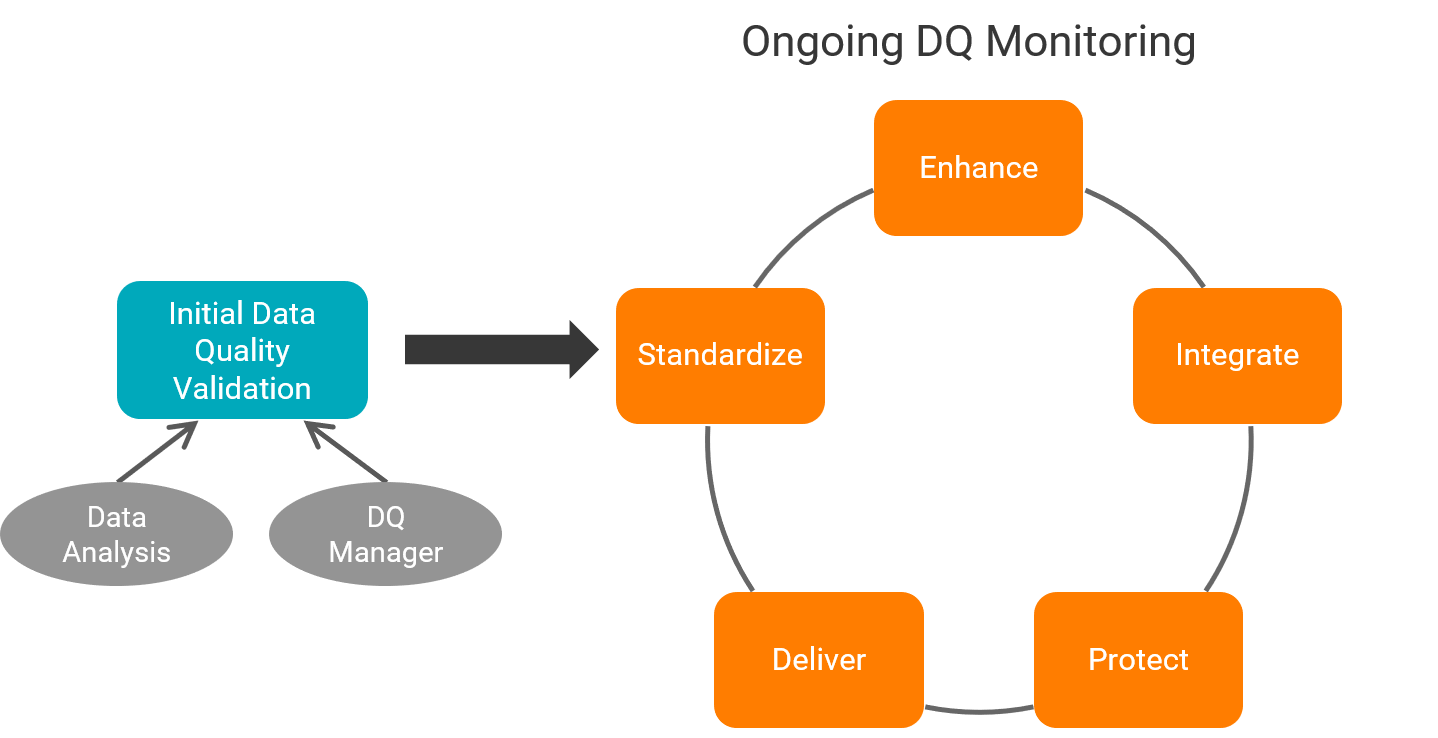

It is also a ‘best practice’ to develop an ongoing data quality measurement and monitoring plan so that the mechanisms and rules you have established for the stages of the data lifecycle are continuously assessed. Data quality issues need to be remedied quickly and efficiently so they don’t surface too late (e.g., in business reports whose numbers cannot be justified).
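
One simple form of ongoing monitoring is to compare each new measurement of a quality metric with both a fixed floor and the recent baseline. The sketch below assumes a history of completeness scores from earlier runs; the function name, the floor and the allowed drop are hypothetical values chosen for illustration.

```python
from statistics import mean

def check_metric(history, latest, floor, max_drop):
    """Return a list of alert messages for the latest measurement."""
    alerts = []
    # Absolute rule: the metric must never fall below the floor.
    if latest < floor:
        alerts.append(f"below floor: {latest:.2f} < {floor:.2f}")
    # Relative rule: the metric must not drop sharply against the baseline.
    if history:
        baseline = mean(history)
        if baseline - latest > max_drop:
            alerts.append(
                f"dropped {baseline - latest:.2f} from baseline {baseline:.2f}"
            )
    return alerts

history = [0.98, 0.97, 0.99]  # assumed scores from previous runs
alerts = check_metric(history, 0.90, floor=0.95, max_drop=0.05)
for message in alerts:
    print("ALERT:", message)
```

Running a check like this after every load means a drop in quality is flagged at the stage where it occurs, rather than being discovered later in a report.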

Clear roles and accountabilities need to be established so that individuals understand where they will engage in this entire process. Organizations should assess how data quality is being addressed currently, how many different people are ‘fixing’ data and where in the data lifecycle. To the extent possible, this needs to be simplified and standardized so that documented rules are applied consistently by those responsible for data quality. This will be different for each organization, and it will need to be designed incrementally with the end-goal in mind. Data quality is simply too important to approach haphazardly.

Data Quality Use Cases

Use Case #1—Customer Satisfaction

Engaging your customers is vital to driving your business. Customer satisfaction depends in large part on what you show customers about how well you know them: how accurately you have identified them, what their preferences are and what their history of interactions with your organization has been. If you are a bank, you need to understand what types of accounts a customer has, the frequency and size of transactions, even the types of investments your clients have made as they relate to retirement planning or lifestyle decisions. If you are an insurance company, knowing the types of policies and coverage your client has could help shape the conversations you have with them and what you can position and sell. Data quality can help you improve the accuracy of your customer records by verifying and enriching the information you already have. Beyond contact information, you can manage customer interactions by storing additional customer preferences, such as the time of day they visit your site and which content they are most interested in.

There are many different types of ‘customers.’ Think broadly about who purchases your products or services. These could include students at a university who pay for classes, instruction or online training. They may include healthcare providers who rely on accurate patient data to provide safe and effective care. They could also, in the more traditional sense, be customers who buy your products.

The more customer information you have, the better you can understand your customers and achieve “Customer 360,” a full view of your customer. But you need to be aware that more data means more complexity, which increases the need for high quality data from each source and for managing that data throughout the data lifecycle.

Use Case #2—Healthcare Safety

The quality of data in a healthcare environment can be a matter of life, death or impairment. A patient's personal information may be collected at several points: ambulance, ER, primary care doctor's office, admitting, billing, etc. This presents many opportunities for errors or inconsistencies, and as data is accumulated, it needs to be verified for consistency and accuracy. This needn’t necessarily be done in person; there are excellent tools available to compare and fix this type of patient information.

Of even more importance is the data collected on patients’ symptoms, vitals and medications. A truly frightening scenario illustrates the critical nature of data quality. A diabetic infant who is admitted receives a dose of insulin that was prepared for an adult who weighs 180 lbs. (about 81 kilos). To prevent what could be a lethal injection, thresholds and rules need to be developed, in this case as part of an algorithm that takes age, weight, height and blood glucose levels as inputs, to determine an appropriate dosage range.
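
The kind of threshold rule described above can be sketched as follows. This is a deliberately simplified illustration that uses weight only: it normalizes the recorded weight to kilograms and rejects any dose above a per-kilogram ceiling. The function names are hypothetical, and the ceiling is a placeholder chosen to make the example work, not clinical guidance. A real rule would also take age, height and blood glucose levels into account and would be defined by clinicians.

```python
LB_PER_KG = 2.20462

def to_kg(value, unit):
    """Normalize a recorded weight to kilograms."""
    unit = unit.strip().lower().rstrip(".")
    if unit in ("kg", "kilo", "kilos"):
        return value
    if unit in ("lb", "lbs"):
        return value / LB_PER_KG
    # An unrecognized unit is a data quality event in its own right.
    raise ValueError(f"unknown weight unit: {unit!r}")

# PLACEHOLDER ceiling for illustration only; NOT a clinical value.
MAX_UNITS_PER_KG = 1.0

def dose_is_plausible(dose_units, weight_value, weight_unit):
    """Check a dose against a weight-based ceiling."""
    weight_kg = to_kg(weight_value, weight_unit)
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return dose_units <= MAX_UNITS_PER_KG * weight_kg

# The same hypothetical dose checked against an adult and an infant record.
print(dose_is_plausible(40, 180, "lbs."))  # adult weighing 180 lbs.
print(dose_is_plausible(40, 4, "kg"))      # infant weighing 4 kg
```

Normalizing units before applying the threshold matters as much as the threshold itself, because a value recorded in pounds and read as kilograms would pass a rule that it should fail.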

Rules of this type should be built into the data quality measurement and monitoring plan and customized for the business case the data informs. It is ultimately the business scenario that determines what the validation and monitoring rules look like and that shapes the checks and balances applied to data at different points.

The Journey Toward DQ Modernization

As organizations pursue the journeys towards Next-Generation Analytics, Data Governance and Compliance, Data Lake and Customer 360, monitoring and managing data quality will be critical to success. However, the data quality journey itself is large and can be intimidating. The best way to get started is to keep your initial efforts tight and focused. Don’t try to boil the ocean or set unrealistic expectations. Deliberately scope your efforts to incrementally build the measurement and management processes around a defined set of data, prove the process and then scale it up with additional data sources. Make sure that staff resources are committed and dedicated to data quality activities. This will relieve data analysts and business end users of the chore of checking and fixing poor quality data time and time again.

Success with data quality will lessen reliance on haphazard ‘fixes’ of the data, which sometimes produce wrong results and misleading information. It will drive more efficient operations, increase staff productivity and improve confidence and trust in data. Only curated data will be available for reports and self-service, reducing the churn of looking for data, massaging it and then trying to interpret the results.

Carefully consider the set of tools that can support your data quality management initiatives. Informatica Data Quality (IDQ), when used in conjunction with PowerCenter, Axon Data Governance and MDM, will rapidly provide the capabilities you need along your data modernization journey.
