Operationalization of Cloud Data Lakes

Cloud Data Warehouse & Data Lake

Data Lake - Overview

A Data Lake is a central repository that lets you store all your data, structured and unstructured, at any volume. Data is typically stored in raw format (that is, as is) without being transformed. From there it can be scrubbed and optimized for the purpose at hand, whether that is dashboards for interactive analytics, downstream machine learning, or analytics applications. Ultimately, the Data Lake enables data teams to work collectively on the same information, which can be curated and secured for the right team or operation.

Challenges in Operationalizing Data Lakes

- Resource and expertise availability and cost - Operationalizing a data lake should rely on tools with self-service capabilities, which limit the need for highly skilled resources and reduce the cost of developing and maintaining the data lake

- Finding Data - Finding data required in the source systems along with the lineage between the systems

- Data Governance - Mapping the data in the data sources to a standard Business Glossary and defining data governance and data privacy rules

- Connectivity to Data Sources - Connecting to many data sources, both structured and unstructured, which may be on premises, in the cloud, big data related, application related, or streaming related. Hand coding the connections to these data sources is also a challenge

- Scalability and hand coding - Scaling up to provide enough power to process the data, and scaling down to reduce cost once data ingestion is complete. Hand-coded data ingestion processes are hard to maintain

- Providing trusted data - Establishing trust in the data being ingested into the data lake is a challenge and is key to the success of the data lake

- Operational Insights - Providing operational insights while the data lake is being populated is crucial, but it is hard to achieve with data ingestion processes

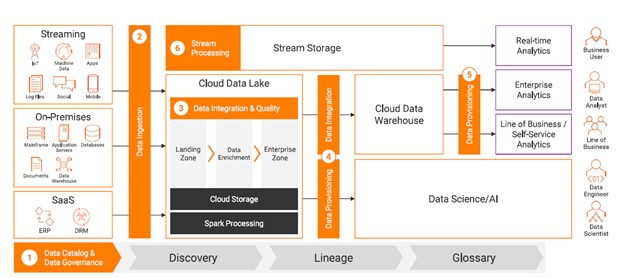

Informatica Architecture for Cloud Data Lake Solution

Data Catalog & Data Governance

Data Discovery using Enterprise Data Catalog (EDC) provides the following capabilities:

- Centralized metadata availability from all data assets

- Self-service data discovery and search capabilities for everyone in the organization, as needed

- Automatic data profiling capabilities for analysis

- Automatic end to end lineage diagrams

- Discover data relationships

- Automatic association of business glossary terms

- Data certifications

- Reviews and ratings of data assets
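
The end-to-end lineage listed above can be pictured as a directed graph of data assets. The following is a minimal sketch of that idea, not the Enterprise Data Catalog API: the asset names, the `LINEAGE` mapping, and the `downstream` and `upstream` helpers are all hypothetical and exist only for illustration.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets it feeds directly.
LINEAGE = {
    "crm.customers": ["landing.customers_raw"],
    "erp.orders": ["landing.orders_raw"],
    "landing.customers_raw": ["enterprise.customers"],
    "landing.orders_raw": ["enterprise.orders"],
    "enterprise.customers": ["dw.customer_orders"],
    "enterprise.orders": ["dw.customer_orders"],
}


def downstream(asset, lineage=LINEAGE):
    """Return every asset reachable from `asset`, in breadth-first order."""
    seen, order, queue = {asset}, [], deque([asset])
    while queue:
        current = queue.popleft()
        for target in lineage.get(current, []):
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


def upstream(asset, lineage=LINEAGE):
    """Return every asset that feeds `asset`, directly or indirectly."""
    # Reverse the edges, then reuse the same breadth-first traversal.
    reverse = {}
    for source, targets in lineage.items():
        for target in targets:
            reverse.setdefault(target, []).append(source)
    return downstream(asset, reverse)


impact = downstream("crm.customers")
origins = upstream("dw.customer_orders")
```

Here `impact` answers "what is affected if this source changes?" and `origins` answers "where did this warehouse table come from?", the two questions a lineage diagram is usually consulted for.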

Data Ingestion

Data Integration/Ingestion using Informatica Intelligent Cloud Services (IICS) for Mass Ingestion and Cloud Data Integration (CDI) provides the following capabilities:

- Broad Connectivity

- Mass Ingestion for file, database, and streaming sources

- Codeless integration

- Push-down optimization

- Serverless and elastic scaling

- Spark-based processing in the cloud

- Stream Processing

- Operational Insights

- Machine learning capabilities

Data Integration and Quality

Data curation using IICS Cloud Data Quality (CDQ) provides the following capabilities:

- Data profiling

- Data quality rules and auto generation of quality metrics

- Dictionaries to manage value lists

- Cleaning, standardization, parsing, verification, deduplication, and consolidation processes

- Integration with data governance and data integration pipelines
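
The curation capabilities above (value-list dictionaries, standardization, quality rules with metrics, and deduplication) can be illustrated with a small sketch. This is plain Python under stated assumptions, not Cloud Data Quality itself: the `COUNTRY_DICTIONARY` values, the rule names, and the sample records are hypothetical.

```python
import re

# Hypothetical value list ("dictionary") used to standardize country names.
COUNTRY_DICTIONARY = {
    "usa": "US", "united states": "US",
    "uk": "GB", "united kingdom": "GB",
}

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def standardize(record):
    """Collapse whitespace, normalize case, and map the country to a code."""
    country = record.get("country", "").strip()
    return {
        "name": " ".join(record.get("name", "").split()).title(),
        "email": record.get("email", "").strip().lower(),
        "country": COUNTRY_DICTIONARY.get(country.lower(), country.upper()),
    }


# Each rule is a name plus a predicate that returns True when a record passes.
RULES = {
    "email_is_valid": lambda r: bool(EMAIL_PATTERN.match(r["email"])),
    "name_is_present": lambda r: bool(r["name"]),
    "country_is_two_letters": lambda r: len(r["country"]) == 2,
}


def quality_metrics(records):
    """Return the pass rate of every rule across the given records."""
    total = len(records)
    if total == 0:
        return {name: 0.0 for name in RULES}
    return {
        name: sum(1 for r in records if rule(r)) / total
        for name, rule in RULES.items()
    }


def deduplicate(records, key="email"):
    """Keep the first record seen for each value of `key`."""
    seen, unique = set(), []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


raw = [
    {"name": "  ada   lovelace ", "email": "Ada@Example.com ", "country": "united kingdom"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "country": "UK"},
    {"name": "", "email": "not-an-email", "country": "Germany"},
]
clean = [standardize(r) for r in raw]
metrics = quality_metrics(clean)
unique = deduplicate(clean)
```

Standardizing before deduplicating matters here: the first two sample records only become identical once whitespace, case, and the country value list have been applied.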

Data Provisioning

Data Preparation using Informatica cloud services provides the following capabilities:

- Discover, search, and explore data using lineage and relationships

- Import data into the data lake

- Upload files into the data lake

- Provide data access capabilities for reporting

Data Streaming

Data streaming using IICS Data Streaming (CDS) provides the following capabilities:

- Versatile connectivity to a variety of sources and source protocols (MQTT, OPC, HTTP, etc.)

- Edge processing to filter or handle bad records and to add metadata to messages for better analytics

- Schema drift handling to support intent-driven ingestion and dynamically evolving schemas

- Stream analytics to support event-time-based processing and late-arriving IoT events

- Serverless and ML capabilities that provide real-time enrichment with transformations, serverless streaming, and real-time operationalization of ML models
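
Two of the ideas above, filtering bad records at the edge and event-time processing with late arrivals, can be sketched in a few lines. This is an illustrative model only, not how IICS Data Streaming is implemented: the window size, the allowed lateness, the field names, and the `process` function are assumptions made for the example.

```python
from collections import defaultdict

WINDOW_SECONDS = 60    # assumed tumbling-window size
ALLOWED_LATENESS = 30  # assumed grace period after a window's end


def is_valid(event):
    """Edge-style filter: require a device id, an event time, and a numeric value."""
    return (
        bool(event.get("device"))
        and isinstance(event.get("event_time"), (int, float))
        and isinstance(event.get("value"), (int, float))
    )


def process(events):
    """Sum values per (device, window start), using event time rather than arrival order.

    Returns the window sums and a list of (event, reason) pairs for dropped events.
    """
    sums = defaultdict(float)
    dropped = []
    watermark = 0  # highest event time seen so far
    for event in events:
        if not is_valid(event):
            dropped.append((event, "invalid"))
            continue
        watermark = max(watermark, event["event_time"])
        window_start = event["event_time"] - event["event_time"] % WINDOW_SECONDS
        window_end = window_start + WINDOW_SECONDS
        # A late event is accepted only while its window is within the grace period.
        if window_end + ALLOWED_LATENESS <= watermark:
            dropped.append((event, "too_late"))
            continue
        sums[(event["device"], window_start)] += event["value"]
    return dict(sums), dropped


events = [
    {"device": "sensor-1", "event_time": 10, "value": 1.0},
    {"device": "sensor-1", "event_time": 70, "value": 2.0},
    {"device": "sensor-1", "event_time": 50, "value": 3.0},   # late, still accepted
    {"device": "", "event_time": 75, "value": 4.0},           # bad record
    {"device": "sensor-1", "event_time": 130, "value": 5.0},
    {"device": "sensor-1", "event_time": 20, "value": 6.0},   # too late
]
window_sums, dropped = process(events)
```

The event stamped 50 arrives after the event stamped 70 but still lands in the first window, because that window's grace period has not yet expired. The event stamped 20 arrives after the watermark has reached 130 and is set aside instead.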

Data Lake Architectures

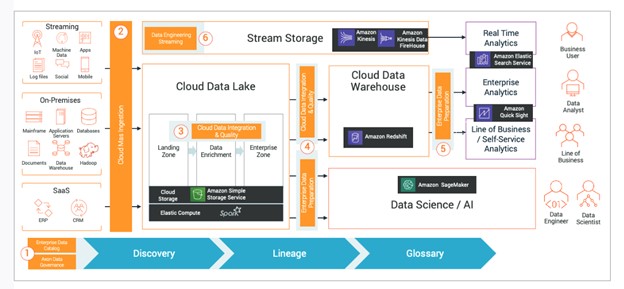

Blueprint for a Successful Cloud Data Warehouse, Data Lake, or Data Lakehouse on AWS

AWS components of the Data Lake architecture:

- Amazon S3: Data from disparate sources is staged in the Data Lake landing zone on Amazon S3. Data from the landing zone is then curated, enriched, and loaded into the Enterprise zone on Amazon S3 for further consumption from the data lake.

- Amazon Redshift: The Cloud Data Warehouse is created on Amazon Redshift to provide data for analytics. This enables data users to acquire new insights for businesses and customers.

- Amazon EC2: Amazon EC2’s simple web service interface lets you obtain and configure capacity with minimal friction. It provides complete control of your computing resources and runs on Amazon’s proven computing environment.

- Amazon Kinesis and Firehose: Amazon Kinesis and Amazon Kinesis Firehose provide capabilities to consume real-time data into the Data Lake and to stream data for real-time analytics. The service scales automatically to match the throughput of the data being ingested into the data lake.
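
The landing zone to Enterprise zone flow described above can be sketched as follows. To stay self-contained, a plain dictionary stands in for an object store bucket; a real pipeline would read and write the objects through Informatica cloud services or a cloud SDK. The `landing/` and `enterprise/` prefixes, the `id` column, and the curation rule are hypothetical.

```python
import csv
import io

LANDING_PREFIX = "landing/"        # hypothetical prefix for raw, as-is data
ENTERPRISE_PREFIX = "enterprise/"  # hypothetical prefix for curated data


def curate(csv_text):
    """Trim every field and drop rows that are malformed or have no id."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, body = rows[0], rows[1:]
    id_index = header.index("id")
    kept = [
        [field.strip() for field in row]
        for row in body
        if len(row) == len(header) and row[id_index].strip()
    ]
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(kept)
    return out.getvalue()


def promote(store):
    """Curate each landing-zone object and write the result to the enterprise zone."""
    landing_keys = [key for key in store if key.startswith(LANDING_PREFIX)]
    for key in landing_keys:
        target = ENTERPRISE_PREFIX + key[len(LANDING_PREFIX):]
        store[target] = curate(store[key])
    return store


# The dictionary plays the role of the bucket: object key -> object content.
bucket = {"landing/customers.csv": "id,name\n1, Ada \n,Missing\n2,Grace\n"}
promote(bucket)
```

The raw object stays untouched in the landing zone, so the curated copy can always be rebuilt if the curation rules change.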

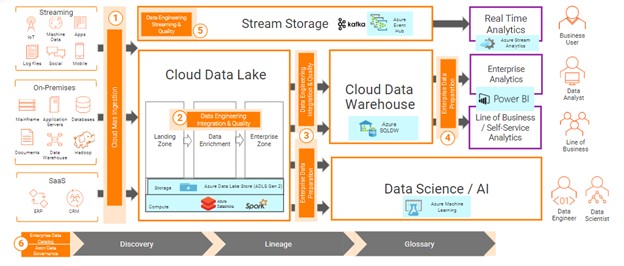

Blueprint for a Successful Cloud Data Warehouse, Data Lake, or Data Lakehouse on Azure

Azure components of the Data Lake architecture:

- Azure Data Lake Storage (ADLS Gen2): Data from disparate sources is staged in the Data Lake landing zone on ADLS Gen2. Data from the landing zone is then curated, enriched, and loaded into the Enterprise zone on ADLS Gen2. Data Lake Storage Gen2 is cost effective because it builds on low-cost Azure Blob Storage.

- Azure Synapse: Data structures are created on Azure Synapse for further consumption using a SQL-like format. Azure Synapse is an analytics service that brings together enterprise data warehousing and big data analytics.

- Kafka on Azure and Event Hubs: Kafka on Azure and Azure Event Hubs are used to consume data into the Data Lake and to stream data for real-time analytics. Azure Event Hubs can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

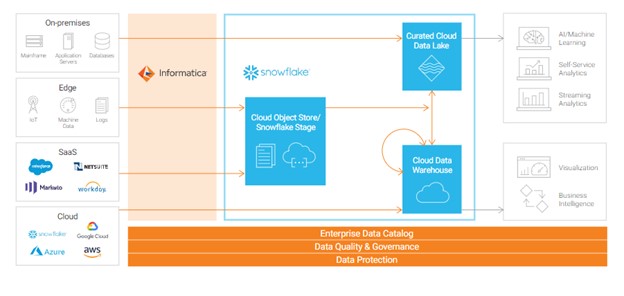

Blueprint for a Successful Cloud Data Warehouse, Data Lake, or Data Lakehouse on Snowflake

Together, Informatica cloud services and Snowflake offer a unified data architecture that delivers best-of-breed cloud data warehouse, cloud data management, and data lake capabilities, and that runs on any cloud by harnessing the power of Snowflake’s Cloud Data Platform.

Snowflake was built for the cloud with a brand-new architecture: multi-cluster, shared data. It is designed to handle any data volume at high speed and virtually unlimited scale. With Snowflake, you can store, transform, and analyze structured and semi-structured data together. Any number of independent virtual compute clusters can be spun up to support multiple concurrent use cases. Snowflake’s data architecture also features an easy-to-use services layer that helps you manage your data and users, with built-in security, encryption, data protection, and high availability, offered on a pay-as-you-go model.

Informatica Intelligent Cloud Services Accelerator for Snowflake:

Informatica Intelligent Cloud Services Accelerator for Snowflake is available to Snowflake customers through Snowflake Partner Connect. This free offer includes:

- Start for free: load up to one billion rows of data per month into Snowflake

- Accelerate data migration and break down enterprise data silos with pre-built connectivity to cloud and on-premises data sources

- Build and run basic to advanced integrations with pre-built templates and reusable mappings, with no coding needed

- Experience high-powered, high-performance transfer of databases, cloud applications, data warehouses, and files securely at scale

- IICS Accelerator for Snowflake includes pre-built connectors that support any data type (structured, unstructured, or complex) and any data pattern (ETL, ELT). It also provides advanced capabilities such as push-down optimization (which converts data pipelines to Snowflake queries for in-database data processing), change data capture, advanced lookups, partitioning, and error handling. You can select 4 of the 20 most popular connectors for Snowflake.
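
Push-down optimization, as described above, means translating pipeline steps into a query that the database runs itself, instead of pulling the rows out and processing them in the integration engine. The sketch below illustrates the idea under loud assumptions: the step format and the `to_sql` function are hypothetical, the generated SQL is generic rather than what IICS emits, and an in-memory SQLite database stands in for Snowflake so that the example runs without any account or driver.

```python
import sqlite3


def to_sql(source, steps):
    """Translate a list of (operation, argument) steps into one SELECT statement.

    Simplification: every filter is applied before the aggregation. Steps are
    assumed to come from a trusted pipeline definition, not from user input.
    """
    columns, filters, group_by = "*", [], None
    for operation, argument in steps:
        if operation == "filter":
            filters.append(argument)
        elif operation == "select":
            columns = ", ".join(argument)
        elif operation == "aggregate":
            group_by, measure = argument
            columns = f"{group_by}, SUM({measure}) AS total_{measure}"
        else:
            raise ValueError(f"unsupported step: {operation}")
    sql = f"SELECT {columns} FROM {source}"
    if filters:
        sql += " WHERE " + " AND ".join(f"({f})" for f in filters)
    if group_by:
        sql += f" GROUP BY {group_by} ORDER BY {group_by}"
    return sql


# SQLite stands in for the cloud data warehouse in this sketch.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE orders (region TEXT, amount REAL, status TEXT)")
connection.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("EU", 10.0, "paid"), ("EU", 5.0, "cancelled"), ("US", 7.5, "paid"), ("EU", 2.5, "paid")],
)

pipeline = [("filter", "status = 'paid'"), ("aggregate", ("region", "amount"))]
query = to_sql("orders", pipeline)
totals = connection.execute(query).fetchall()
```

Only the two aggregated rows leave the database; the filtering and summing happen where the data already lives, which is the point of pushing the work down.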

Informatica Mass Ingestion service:

The Informatica Mass Ingestion service streamlines the transfer of enterprise data assets in file format from on-premises and cloud sources to Snowflake. It transfers files of any size and of any supported type securely, with high performance and scalability.

Informatica Enterprise Data Catalog, Cloud Data Integration, and Cloud Data Quality are also supported on the Snowflake data warehouse for data discovery and data curation.