Data lake operationalization ensures that the data lake achieves its business goals. When data operationalization is mature, businesses achieve faster time to market, improved performance, and greater efficiency. Signs of mature operationalization include automated security and metadata generation, and data that is easy for different types of users to find, access, and share.

Organizations will realize the return on their investment in the data lake once it supports improved operations, new products and services, and enhanced customer experiences. Reaching these data-driven improvements is a struggle, and finding the right framework and operationalization model will lead to significant value for the business.

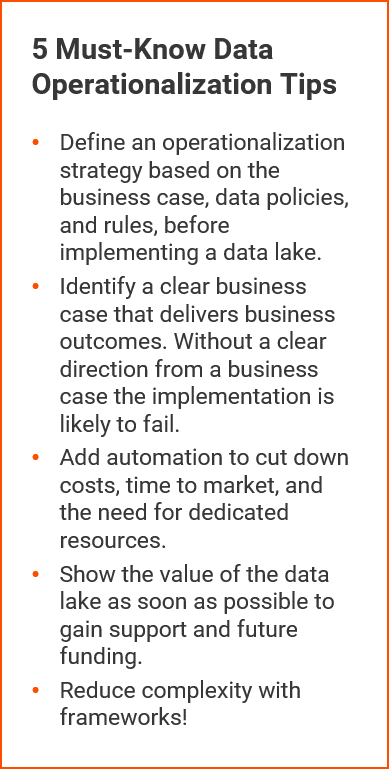

Data lakes have complex architectures, and their successful operation is often hampered by a misguided focus during delivery. Data lake implementations regularly go awry when they concentrate on technological capabilities, functionality, and cutting-edge tooling instead of the business case and the requirements that must be fulfilled. The design of the operational model needs to be driven by the business case and related requirements. A strong operationalization design depends on these elements in combination with data governance and policies.

An organization will therefore need to define the business goals, business use cases, and key requirements to help build a strong foundation for the data lake. When a solid plan is in place, enterprises can avoid changing strategies midway through the data lake lifecycle.

Questions to Help Develop an Operations Strategy

Operationalization decisions must be made about the layers, concepts, and components regarding design and implementation. Business use cases and goals will inform the choices and design needs. Specifically, these decisions will be based on business, user, and technical requirements.

When defining an operationalization plan, you will need to outline requirements for data, including:

Security (informed by data governance policies)

- What are your data zones?

- Does certain data need to be encrypted at rest?

- Does data need to be encrypted in motion?

- How will you authenticate users?

Authorization

- Will you authorize users through more traditional role-based (RBAC) authorization?

- Will you authorize users through attribute-based (ABAC) authorization, for example through metadata attributes?

- Or through a combination of role-based and attribute-based authorization?
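To make the combined option concrete, here is a minimal sketch of a read check that requires both a matching role (RBAC) and matching metadata attributes (ABAC). The `User` and `Dataset` classes, their field names, and the example values are hypothetical illustrations, not part of any Informatica product API.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)          # e.g. {"analyst"}
    attributes: dict = field(default_factory=dict)   # e.g. {"region": "EU"}


@dataclass
class Dataset:
    name: str
    allowed_roles: set = field(default_factory=set)           # RBAC: roles that may read
    required_attributes: dict = field(default_factory=dict)   # ABAC: metadata the user must match


def rbac_allows(user, dataset):
    """Role-based check: the user holds at least one allowed role."""
    return bool(user.roles & dataset.allowed_roles)


def abac_allows(user, dataset):
    """Attribute-based check: every required attribute matches the user's value."""
    return all(
        user.attributes.get(key) == value
        for key, value in dataset.required_attributes.items()
    )


def can_read(user, dataset):
    """Combined policy (an assumption): both checks must pass."""
    return rbac_allows(user, dataset) and abac_allows(user, dataset)
```

Requiring both checks is only one possible policy; an organization could equally decide that either check is sufficient for less sensitive zones.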

Availability (Service level agreements and quality of service)

- What is the volume of data that will be available?

- What are the data load patterns?

- How many concurrent users will be allowed?

- What are the SLAs (promised response times, etc.)?
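Answers to the availability questions above can be recorded in a form that is checked against observed behavior. The following is a minimal sketch under assumed names: `AvailabilityRequirements` and its fields are hypothetical, and the rule "a given fraction of requests must finish within the promised response time" is just one common way to phrase an SLA.

```python
from dataclasses import dataclass


@dataclass
class AvailabilityRequirements:
    max_concurrent_users: int
    promised_response_seconds: float
    required_fraction_within_sla: float  # e.g. 0.9 means 90% of requests


def within_agreed_service_levels(req, response_times, concurrent_users):
    """Return True if the observed load and response times satisfy the requirements."""
    if concurrent_users > req.max_concurrent_users:
        return False  # more users than the agreement covers
    if not response_times:
        return True   # nothing observed, nothing violated
    within = sum(1 for t in response_times if t <= req.promised_response_seconds)
    return within / len(response_times) >= req.required_fraction_within_sla
```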

Other questions to address:

- What are the data zones, where is the data located, and for what goal?

- Is the data confidential? Will data have different levels of confidentiality based on data governance policies?

- Does the data arrive in the data lake by stream or by batch?

- How often is the data refreshed?

- Is the data structured, unstructured, or a combination?

- What are the folder structures and naming conventions in the data lake for:

  - Hive databases

  - File names

  - Folder names and structure

  - Zone names
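Once naming conventions are agreed, they can be enforced automatically at ingestion time. The sketch below assumes a hypothetical convention of `/<zone>/<source>/<dataset>/<dataset>_<yyyymmdd>.<ext>` with three hypothetical zone names; the actual zones, folder layout, and Hive database naming should come from your own governance decisions.

```python
import re

ZONES = ("raw", "curated", "consumption")  # hypothetical zone names

# Hypothetical layout: /<zone>/<source>/<dataset>/<dataset>_<yyyymmdd>.<ext>
PATH_PATTERN = re.compile(
    r"^/(?P<zone>[a-z]+)"
    r"/(?P<source>[a-z0-9_]+)"
    r"/(?P<dataset>[a-z0-9_]+)"
    r"/(?P<file>[a-z0-9_]+_\d{8}\.(?:csv|json|parquet))$"
)


def parse_lake_path(path):
    """Return the path components if the path follows the convention, else None."""
    match = PATH_PATTERN.match(path)
    if match is None:
        return None
    parts = match.groupdict()
    if parts["zone"] not in ZONES:
        return None
    # The file name must start with the dataset folder name.
    if not parts["file"].startswith(parts["dataset"] + "_"):
        return None
    return parts


def hive_database_name(parts):
    """Derive a Hive database name as <zone>_<source> (an assumed convention)."""
    return f"{parts['zone']}_{parts['source']}"
```

For example, `parse_lake_path("/raw/crm/customers/customers_20240131.parquet")` returns the four components, while a path in an unknown zone or with a mismatched file name returns `None`.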

Many of the data lake operationalization decisions will be dependent on data lake governance decisions. In planning both, define data management on the lake regarding:

- Usability (relevant)

- Availability

- Confidentiality

- Integrity (quality and lineage)

- Security / access

Likewise, automating portions of the data lake operationalization depends on how data governance is set up for:

- Procedures and policies

- Rules

- Governing body (owner)

- Implementation (through capability-driving tools such as EDC, AXON, etc.)

- Embedding into the design of the data lake

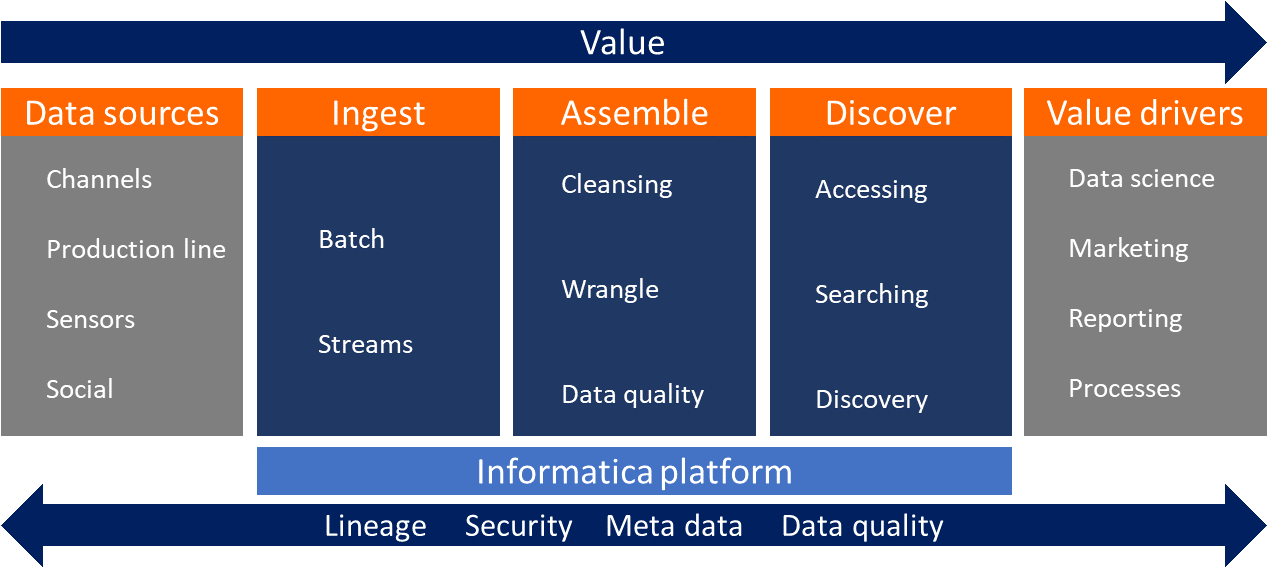

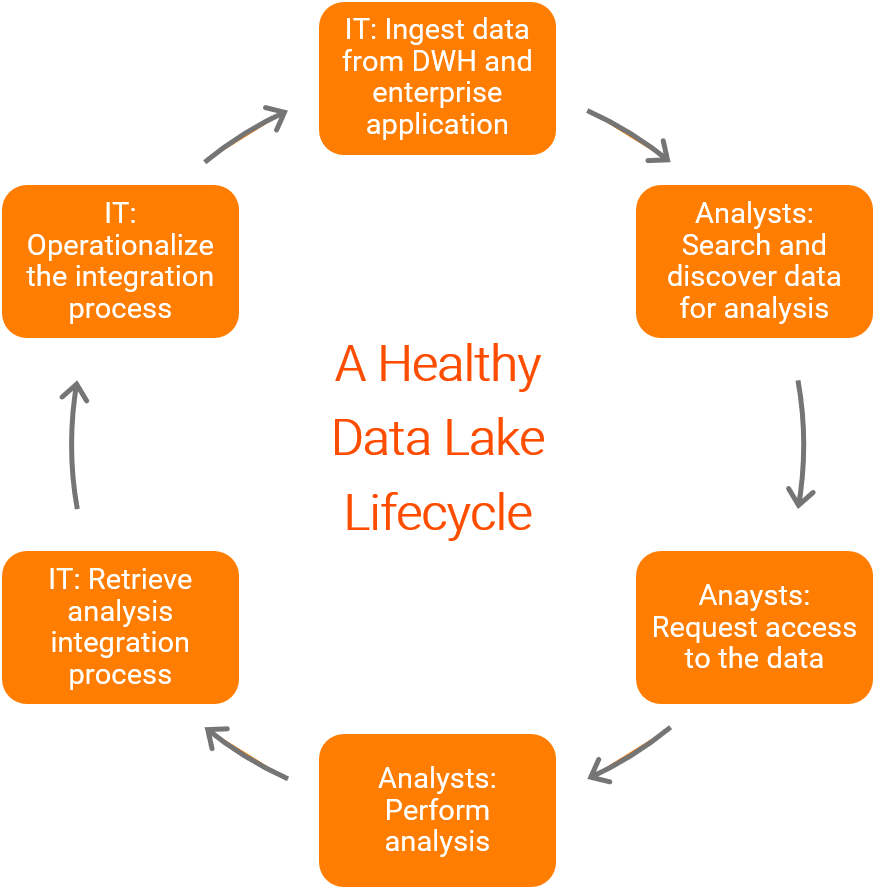

Data Lake Operations

After the data lake infrastructure and design are implemented, IT and users will work with different business units to identify and prioritize the data sources. The next step is to begin ingesting, or loading, the data into the data lake. From here, the integration process is migrated from the development environment to the QA/Test environment and then to production. Once the data analysts have access to the different datasets, they can cleanse, transform, combine, and aggregate the datasets. One-off analyses can be saved and reused at a later date. Recurring analyses are passed to IT to operationalize. The data structures, data objects, and mappings from the analysis are imported into the lake development environment and optimized based on the organization’s standards and best practices. Once complete, the process is production ready.
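The migration path from development to QA/Test to production can be modeled as an ordered promotion with a quality gate at each step. This is a minimal sketch only: the environment identifiers, the `promote` function, and the dictionary shape used for a process are hypothetical, and a real deployment would rely on the organization's own release tooling.

```python
ENVIRONMENTS = ("development", "qa_test", "production")  # order of promotion


def next_environment(current):
    """Return the environment that follows `current`, or None if it is the last one."""
    position = ENVIRONMENTS.index(current)  # raises ValueError for unknown names
    if position + 1 < len(ENVIRONMENTS):
        return ENVIRONMENTS[position + 1]
    return None


def promote(process, checks_passed):
    """Return a copy of `process` moved one environment forward.

    `process` is a dict with at least an "environment" key; `checks_passed`
    stands in for whatever validation the organization requires.
    """
    target = next_environment(process["environment"])
    if target is None:
        raise ValueError("process is already in production")
    if not checks_passed:
        raise RuntimeError("validation failed; process stays where it is")
    return {**process, "environment": target}
```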

Typical work includes:

Data on the lake should maintain lineage, and metadata should be generated through discovery. Additionally, business terms should be linked to data domains, and data profiles will need to be assigned to data objects. The language chosen for these naming conventions and classifications is critical to ensuring that users can find and leverage the data.
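As an illustration of how lineage and business terms make data findable, the sketch below keeps a tiny in-memory catalog. The `CatalogEntry` class, its fields, and the dataset names used with it are hypothetical; a production data lake would hold this information in a dedicated catalog tool rather than in application code.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    upstream: list = field(default_factory=list)        # datasets this one was derived from
    business_terms: set = field(default_factory=set)    # glossary terms linked to the data
    data_domain: str = ""


def full_lineage(catalog, name):
    """Return all upstream ancestors of `name`, nearest first, without duplicates."""
    seen = []
    queue = list(catalog[name].upstream)
    while queue:
        current = queue.pop(0)
        if current in seen:
            continue
        seen.append(current)
        if current in catalog:
            queue.extend(catalog[current].upstream)
    return seen


def find_by_term(catalog, term):
    """Return the names of entries tagged with a business term (case-insensitive)."""
    wanted = term.lower()
    return sorted(
        entry.name
        for entry in catalog.values()
        if wanted in {t.lower() for t in entry.business_terms}
    )
```

With entries linked this way, a user can search by a business term and then trace any result back to its raw sources.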

Accelerating Business through Data Operationalization

Organizations that operationalize their data lakes can gain insight into their enterprise data more quickly. Operationalization ensures efficiencies and reuse of existing data through automation. Informatica has helped organizations reach better efficiency, project alignment, regulatory compliance, and a simpler data landscape through data operationalization. As businesses approach digital transformation and require more agility in their highly competitive markets, they are bringing data operationalization to their data lakes and realizing that:

Business analysts and data scientists can easily put business data to work for the business. They will have the needed permissions for easy and automatic access to high-quality data through attribute-based access control (ABAC) and will no longer have to wait for manual data preparation.

Democratizing data makes it infinitely more valuable. Good governance ensures that anyone in the business is able to access the data they need easily and quickly and begin realizing data-driven value.

Informatica and Data Operationalization

Informatica data lake solutions enable business and IT to leverage and operationalize their data to drive value. With Informatica Data Engineering Integration (DEI), organizations can achieve faster, more flexible, and repeatable data ingestion and integration on Hadoop, including:

- Access to data for the consumer

- Data integration on Hadoop and Spark

- Ability to parse complex, multi-structured, hierarchical, and unstructured data

- Mass ingestion from different source systems and applications into the cloud, on premises, or a combination of both

Are you ready to get started operationalizing your data? Get started here:

Operationalize and Scale Data Engineering Integration Projects

Data Lakes for the Enterprise

The CDO’s Guide to Intelligent Data Lake Management

Professional Services Offerings

Data Engineering Integration Adoption Success Pack

The Informatica portfolio of Intelligent Data Engineering products accelerates your ability to ingest, prepare, catalog, master, govern, and protect your big data, so that you can deliver successful data lakes and make business decisions based on new, accurate, and consistent insights. Informatica’s Professional Services organization helps ensure success in applying these products to your enterprise Data Engineering Integration strategy.

Data Security Discovery

A standardized engagement provides the technical foundation as well as the basic Data Security solution knowledge that customers will need to ensure maximum benefit in the shortest amount of time. The goal is to set the customer towards detecting and protecting sensitive data in their environments. This offering provides a powerful platform to identify, analyze, detect, and monitor sensitive data risks.

Technical Architecture Consulting

Informatica’s Technical Architecture Managers are highly skilled consultants who focus on ensuring success. With deep and broad experience designing, building, and implementing data lakes using Informatica and cloud services, the Technical Architecture Manager will maximize value within your organization.