Challenge

A holistic, 360-degree view of enterprise digital assets is critical to managing the modern business environment, because companies often find themselves with multiple redundant systems that contain master data built on differing data models and data definitions. The challenge in data governance is orchestrating people, policies, procedures, and technology to manage enterprise data availability, usability, integrity, and security for business processes.

Master Data Management (MDM) is the controlled process by which the master data can be created and maintained as the system of record for the enterprise. Master data management addresses these major data challenges in the modern business environment:

- Creating a cross-enterprise perspective of enterprise data assets for better business intelligence and a clear understanding of the myriad data entities that exist across the organization.

- Validating data as correct, consistent, and complete to enhance data reliability and data maintenance procedures across customer records for improved transaction management.

- Publishing data in context for consumption by internal or external business processes, applications, or users.

- Providing data governance at the enterprise level, with tight controls over data maintenance processes and best practices, and secure access to data.

- Coexisting with the existing information technology infrastructure.

Description

A logical view of the MDM Hub, the data flow through the Hub, and the physical architecture of the Hub are described in the following sections.

Logical View

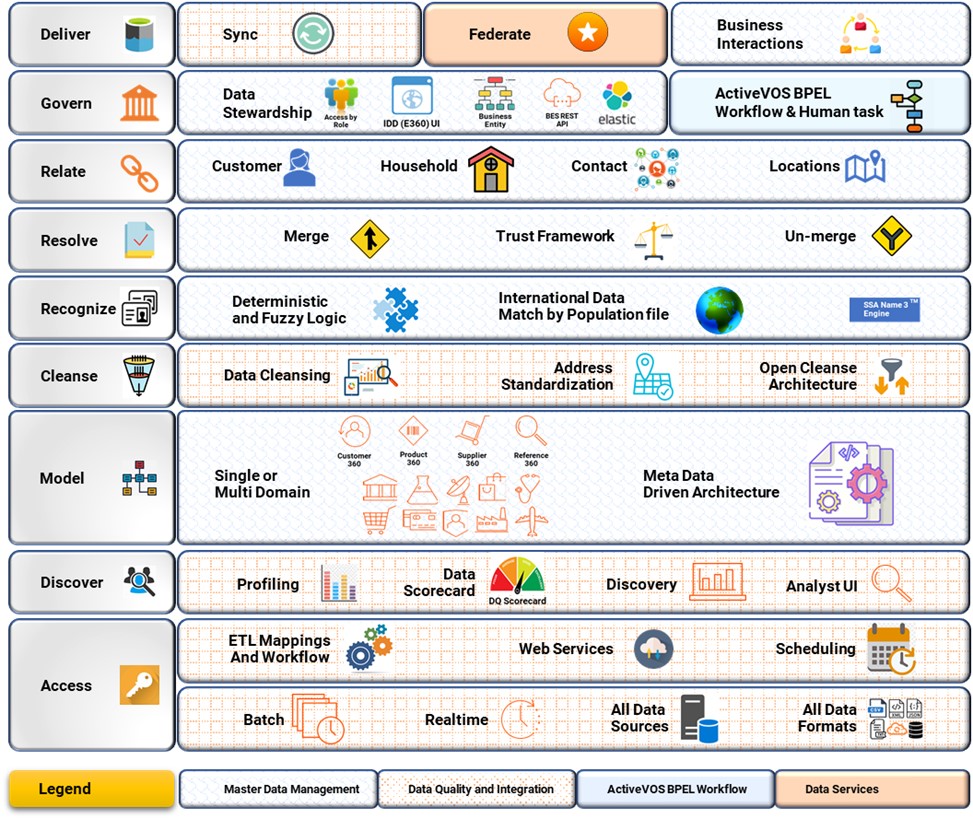

A logical view of the MDM Hub is shown below:

The Hub supports data integration in the form of batch, real-time, and/or asynchronous messaging. Typically, this access is supported through a combination of data integration tools, such as Informatica Data Quality or PowerCenter for batch processing, and Business Entity Services REST APIs for real-time integrations.

To master data in the Hub optimally, source data profiling must be conducted so that anomalies in metadata and business data are identified and appropriate data cleansing and validation measures are implemented. This analysis typically takes place in Informatica Data Quality. Data cleansing rules should be centralized in Informatica Data Quality and reused across MDM integrations and the user interface for all cleansing and validation needs. A basic version of Informatica Data Quality is bundled with the MDM software to handle basic MDM use cases; advanced needs require a separate, properly sized DQ instance.

The role of the Hub is to master data for one or more domains within a Customer's environment. The MDM Hub maintains a significant amount of metadata to support data mastering functionality, such as lineage, history, and survivorship. The MDM Hub data model is completely flexible: it can start from a Customer's existing model or an industry-standard model, or it can be created from scratch.

Once the data model has been defined, data needs to be cleansed and standardized. The MDM Hub has an open architecture that allows a Customer to define native cleanse routines or to use cleanse libraries exposed as web services in Informatica Data Quality.

Data is then matched in the system using a combination of deterministic and fuzzy matching. Informatica SSA-NAME3™ is the underlying match technology in the Hub; its interfaces have been optimized for Hub use and abstracted so that business users can use them easily.
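The combination of deterministic and fuzzy matching can be illustrated with a minimal sketch. It does not use the Hub's match engine: Python's standard `difflib` stands in for the fuzzy component, and the field names (`tax_id`, `name`) and the 0.85 threshold are assumptions chosen only for illustration.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not block a match."""
    return " ".join(text.lower().split())


def deterministic_match(a: dict, b: dict, key: str = "tax_id") -> bool:
    """Exact match on a shared identifier; empty identifiers never match."""
    return bool(a.get(key)) and a.get(key) == b.get(key)


def fuzzy_score(a: dict, b: dict, field: str = "name") -> float:
    """Similarity in [0, 1] from difflib (a stand-in for a real fuzzy match engine)."""
    return SequenceMatcher(None, normalize(a[field]), normalize(b[field])).ratio()


def is_match(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """A pair matches if either the deterministic or the fuzzy rule fires."""
    return deterministic_match(a, b) or fuzzy_score(a, b) >= threshold


# Hypothetical sample records
r1 = {"name": "Acme Corporation", "tax_id": "12-345"}
r2 = {"name": "ACME  Corporation", "tax_id": ""}
r3 = {"name": "Zenith Holdings", "tax_id": "12-345"}

print(is_match(r1, r2), is_match(r1, r3), is_match(r2, r3))
```

Here `r1` and `r2` match on the fuzzy rule after normalization, `r1` and `r3` match on the identifier alone, and `r2` and `r3` do not match on either rule.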

After matching has been performed, the Hub consolidates records by linking them together to produce a registry of related records or by merging them to produce a Golden Record or a Best Version of the Truth (BVT). When a BVT is produced, survivorship rules defined in the MDM trust framework are applied such that the preferred attributes from the contributing source records are promoted into the BVT.
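As a rough illustration of attribute-level survivorship, the sketch below promotes, for each attribute, the value with the highest trust among the contributing source records. The record layout, source names, and trust values are hypothetical, and the Hub's actual trust framework considers more factors than a single number.

```python
def build_bvt(source_records):
    """Return (bvt, lineage): the surviving value per attribute and its source."""
    best = {}  # attribute -> (value, trust, source)
    for record in source_records:
        for attr, (value, trust) in record["attrs"].items():
            if value is None:
                continue  # a missing value never survives
            # Strictly greater: on a tie, the earlier record keeps the attribute.
            if attr not in best or trust > best[attr][1]:
                best[attr] = (value, trust, record["source"])
    bvt = {attr: entry[0] for attr, entry in best.items()}
    lineage = {attr: entry[2] for attr, entry in best.items()}
    return bvt, lineage


# Hypothetical contributing records: attribute -> (value, trust score)
sources = [
    {"source": "CRM", "attrs": {"name": ("Acme Corp", 80), "phone": ("555-0100", 40)}},
    {"source": "ERP", "attrs": {"name": ("ACME CORPORATION", 60),
                                "phone": ("555-0199", 70),
                                "duns": ("123456789", 90)}},
]

bvt, lineage = build_bvt(sources)
print(bvt)
print(lineage)
```

The lineage dictionary shows why the result is a composite: the name survives from one source while the phone number and DUNS number survive from the other.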

The BVT provides a basis for identifying and managing relationships across entities and sources. By building on top of the BVT, the MDM Hub can expose relationships which are cross source or cross entity and are not visible within an individual source.

The Business Entity Framework can be used to develop composite business data objects, called Business Entities, based on the underlying domain data model. Business entities serve as foundational objects for developing the Entity 360 (E360) user interface and enable the Business Entity Services API framework for real-time inbound and outbound integration using REST and SOAP protocols. This provides consistent cleansing, validation, and maintenance of business data, whether it arrives through the E360 UI or through real-time calls.

A data governance framework is provided to data stewards through the Entity 360 interface. The E360 framework leverages business entity objects defined on the data model to provide end-to-end views of master data, and it is highly configurable to suit a wide range of business requirements. With the business entity based user interface, there are no restrictions on the number of child levels that can be created for a single entity, which allows a true representation of the business data structure.

Additional information related to an entity, whether available within MDM or from external sources, can be presented in the user interface through component configurations that add context to the entity. With the Data-as-a-Service (DaaS) integration capability, users can enrich their entity data in real time with data from external providers such as D&B.

E360 is also integrated with the Elasticsearch engine to provide text-based search on business entity data stored in MDM. The searchable attributes and the filters on search results are configurable, providing an efficient way to locate data within MDM.

E360 provides highly evolved Task management functionality driven by the ActiveVOS BPEL workflow engine that is fully integrated with MDM. ActiveVOS software is provided with the MDM software and comes with six out-of-the-box workflows that address common collaboration and approval workflow use cases. These can be customized, or custom workflows can be created from scratch, to allow customers to apply robust data governance control on MDM data. Additional licensing of the ActiveVOS Designer tool is needed for workflow customizations.

An underlying security framework within the MDM Hub provides fine-grained control of access to data within the Hub. This framework supports configuring security policies locally or consuming them from external sources, depending on the customer's desired infrastructure. It enforces data access controls across all data touch points exposed by MDM.

Data Flow

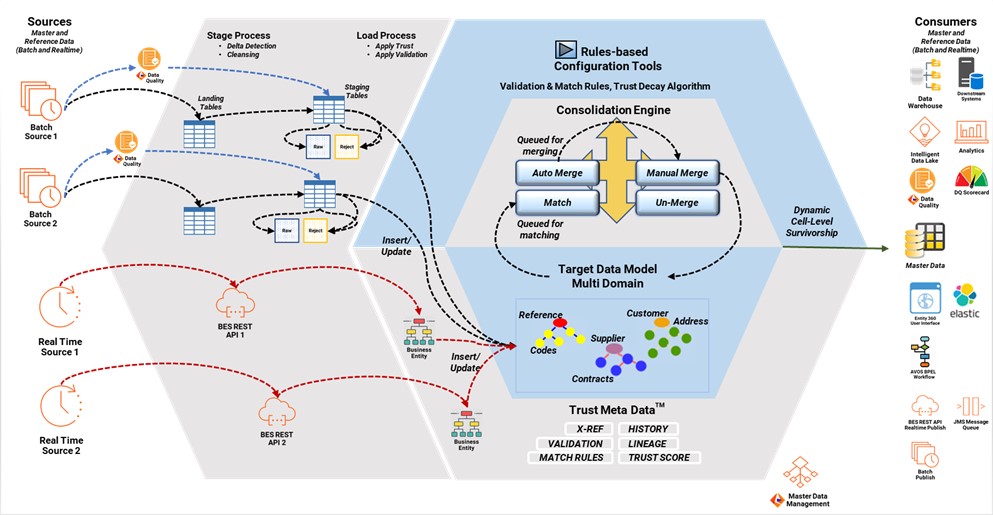

A typical data flow through the Hub is shown below

Batch Processes

Implementations of MDM start by defining the data model into which all of the data will be consolidated. This target data model will contain the BVT and the associated metadata that supports it. Source data is brought into the hub by putting it into a set of Landing Tables. A Landing Table is a representation of the data source in the general form of the source. There is an equivalent table known as a Staging Table, which represents the source data, but in the format of the Target Data model. Therefore, data needs to be transformed from the Landing Table to the Staging table, and this happens within the MDM Hub as follows:

- Incoming data is run through a Delta Detection process to determine if it has changed since the last time it was processed. Only records that have changed are processed.

- Records are run through a staging process which transforms the data to the form of the Target Model. The staging process is a mapping within the MDM Hub that performs any number of standardization, cleansing or transformation processes. The mappings also allow for external cleanse engines to be invoked.

- Records are then loaded into the Staging Table. The pre-cleansed version of each record is stored in a RAW table, and records that are inappropriate to stage (for example, because they have structural deficiencies such as a duplicate PKEY) are written to a REJECT table to be manually corrected at a later time.
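The landing-to-staging steps above can be sketched as follows. This is a simplified model rather than the Hub's implementation: delta detection is approximated by comparing a row hash with the hash recorded on the previous run, the cleanse step is a trivial trim and title-case, and the column names (`pkey`, `name`) are assumptions.

```python
import hashlib


def row_hash(row: dict) -> str:
    """Stable hash of a landing row, used here as a simple delta-detection key."""
    payload = "|".join(f"{key}={row[key]}" for key in sorted(row))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def stage(landing_rows, previous_hashes):
    """Return (staging, raw, reject) lists for one batch of landing rows."""
    staging, raw, reject = [], [], []
    seen_pkeys = set()
    for row in landing_rows:
        pkey = row["pkey"]
        if previous_hashes.get(pkey) == row_hash(row):
            continue  # delta detection: unchanged since the last run
        if pkey in seen_pkeys:
            reject.append(row)  # structural problem: duplicate PKEY in this batch
            continue
        seen_pkeys.add(pkey)
        raw.append(dict(row))  # keep the pre-cleansed version
        staging.append({"pkey": pkey, "name": row["name"].strip().title()})
    return staging, raw, reject


# Hypothetical batch: row 1 is unchanged, row 2 is new, row 3 reuses PKEY 2
unchanged = {"pkey": "1", "name": "  acme corp "}
previous = {"1": row_hash(unchanged)}
landing = [
    unchanged,
    {"pkey": "2", "name": "zenith  "},
    {"pkey": "2", "name": "zenith ltd"},
]

staging, raw, reject = stage(landing, previous)
print(staging, raw, reject)
```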

Alternatively, the Landing tables could be bypassed and data loaded directly into the Staging tables by the ETL process. As the data is extracted from the source system, data cleansing and standardization must be performed before the data is loaded to the Staging tables. Any delta detection or structural problems (like duplicate PKEYS) must also be handled by the ETL processes. Data loaded directly into the Staging tables must be ready to load directly into the Base tables with no further changes.

The data in the Staging Table is then loaded into the Base Objects. This process first applies a trust score to attributes for which it has been defined. Trust scores represent the relative survivorship of an attribute and are calculated at the time the record is loaded, based on the currency of the data, the data source, and other characteristics of the attribute.
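To make the effect of data currency on trust concrete, the sketch below computes a score that decays linearly from a maximum to a minimum as the data ages. The linear shape, the parameter names, and the default values are illustrative assumptions; they are not taken from the Hub's configuration.

```python
from datetime import date


def trust_score(last_updated: date, as_of: date,
                max_trust: float = 90.0, min_trust: float = 30.0,
                decay_days: int = 365) -> float:
    """Linear decay from max_trust (fresh data) to min_trust (age >= decay_days)."""
    age_days = max((as_of - last_updated).days, 0)
    if age_days >= decay_days:
        return min_trust
    return max_trust - (max_trust - min_trust) * age_days / decay_days


# Hypothetical example: a value updated 50 days ago, with a 100-day decay period
score = trust_score(date(2024, 1, 1), date(2024, 2, 20), decay_days=100)
print(score)
```

Different maximum, minimum, and decay settings per source system would let a more reliable source retain a higher score for longer than a less reliable one.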

Records are then pushed through a matching process which generates a set of candidates for merging. Depending on which match rules caused a record to match, the record will be queued either for automatic merging or for manual merging. Records that do not match will be loaded into the Base Object as unique records. Records queued for automatic merge will be processed by the Hub without human intervention; those queued for manual merge will be displayed to a Data Steward for further processing.
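The routing described above can be sketched as a small function. The rule names and the assignment of rules to the automatic queue are hypothetical; in practice this is determined by how the match rules are configured.

```python
# Hypothetical rule configuration: rules trusted enough for automatic merging
AUTO_MERGE_RULES = {"exact_tax_id"}


def route_records(record_ids, match_results):
    """Queue each record by the match rule that fired for it, if any.

    match_results maps a record id to the name of the rule that matched it;
    records absent from the mapping did not match anything.
    """
    queues = {"auto_merge": [], "manual_merge": [], "unique": []}
    for record_id in record_ids:
        rule = match_results.get(record_id)
        if rule is None:
            queues["unique"].append(record_id)        # loaded as a unique record
        elif rule in AUTO_MERGE_RULES:
            queues["auto_merge"].append(record_id)    # merged without intervention
        else:
            queues["manual_merge"].append(record_id)  # reviewed by a Data Steward
    return queues


queues = route_records(
    ["A1", "A2", "A3"],
    {"A1": "exact_tax_id", "A2": "fuzzy_name_city"},
)
print(queues)
```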

All data in the hub is available for consumption as a batch, as a set of outbound asynchronous messages or through a real-time services interface.

Real-time Processes

With Business Entity Services (BES), a source system can update data in MDM directly and synchronously in real time. These APIs use REST and SOAP protocols with JSON or XML payloads and are enabled on the underlying business entity structure. The composite business entity data structure allows one or more entities and their child records to be created or updated in a single call.

Data publication through BES returns the entire data structure of one or more business entities, including child objects, as the response to a single call. The BES APIs accept multiple filters as part of the request and can return data at whatever granularity the calling application needs.
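The idea of a composite payload and a filtered read can be sketched without calling a server. Everything specific in this example is a placeholder: the host, the URL layout, the entity and field names, and the filter parameters are hypothetical and should be replaced with the values documented for the installed BES version.

```python
import json
from urllib.parse import urlencode


def build_entity_payload(name: str, addresses: list) -> dict:
    """Parent entity and its child records in one document (hypothetical fields)."""
    return {"name": name, "addresses": [dict(address) for address in addresses]}


def build_read_url(base_url: str, entity: str, row_id: str, filters: dict) -> str:
    """Compose a read URL; the path layout and filter names are illustrative only."""
    query = urlencode(filters)
    return f"{base_url}/{entity}/{row_id}" + (f"?{query}" if query else "")


payload = build_entity_payload(
    "Acme Corp",
    [{"city": "Austin", "type": "billing"}, {"city": "Boston", "type": "shipping"}],
)
body = json.dumps(payload)  # would be sent as the body of one create or update call

url = build_read_url(
    "https://mdm.example.invalid/api",  # placeholder host and base path
    "Organization", "1001",
    {"depth": 2, "children": "addresses"},
)
print(body)
print(url)
```

The point of the sketch is the shape: one request carries the parent and all of its children, and one read returns the same nested structure narrowed by the filters supplied.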

Physical Architecture

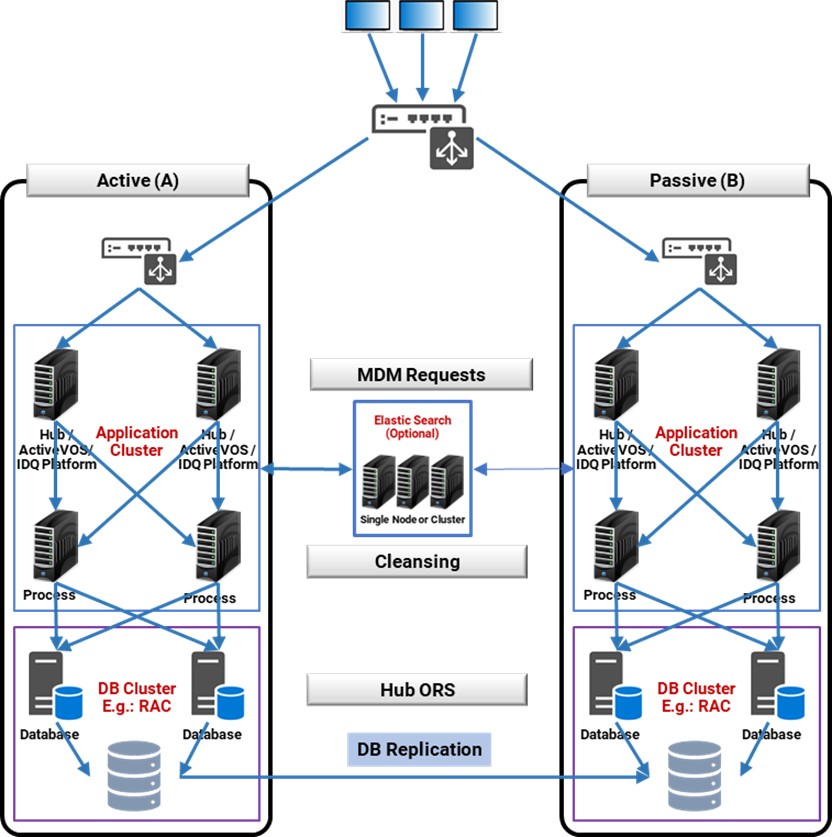

The MDM Hub is designed as a three-tiered architecture. These tiers consist of the MDM Hub Store, the MDM Hub Server(s) (includes Process Servers) and the MDM User Interface.

The Hub Store is where business data is stored and consolidated. The Hub Store contains common information about all of the databases that are part of an MDM Hub implementation. It resides in a supported database server environment. The Hub Server is the run-time component that manages core and common services for the MDM Hub. The Hub Server is a J2EE application, deployed on a supported application server, that orchestrates the data processing within the Hub Store as well as integration with external applications. Refer to the latest Product Availability Matrix for the versions of databases, application servers, and operating systems currently supported by the MDM Hub.

The Hub may be implemented in either a standard architecture or in a high availability architecture. In order to achieve high availability, Informatica recommends the configuration shown below:

Refer to MDM_10x_InfrastructurePlanningGuide_xx.pdf provided as part of MDM documentation for additional details on Installation and Deployment Considerations.

The Hub implementation can optionally be set up to work with the following software, which is either open source or provided as part of MDM:

- Elasticsearch (open source): It is recommended to deploy the Elasticsearch server on a separate application server, using its own Tomcat application server.

- Informatica Platform (provided as part of MDM): Enables basic Informatica Data Quality functions when the customer has no standalone DQ installation. Advanced DQ functions require additional licensing.

- ActiveVOS (BPEL workflow server software provided as part of MDM): Integrated with MDM as the primary workflow engine supported for Business Process Management (BPM).

The high availability configuration employs a properly sized DB server and application server(s). The DB server is configured as multiple DB cluster nodes, and the database is distributed in a SAN architecture. The application server requires sufficient file space to support efficient match batch group sizes. Refer to the MDM Sizing Guidelines to properly size each of these tiers.

Database redundancy is provided through the database cluster, and application server redundancy is provided through application server clustering.

To support geographic distribution, the HA architecture described above is replicated in a second node, with failover provided using a log replication approach. This configuration is intended to support Hot/Warm or Hot/Cold environments but does not support Hot/Hot operation.