PowerExchange CDC Failover for Linux and UNIX

Cloud Data Warehouse & Data Lake

Challenge

Businesses expect their production applications and supporting databases to be available 24/7. To minimize unplanned outages, many companies have implemented the following high-availability features for their databases in Linux, UNIX, and Windows environments.

- Oracle: Real Application Clusters (RAC) and/or Oracle Data Guard

- SQL Server: Always-on Availability Groups

For the PowerCenter environment, companies often also implement PowerCenter Grid with High Availability (HA). With these precautions in place, failover is automatic, with little to no disruption in service.

Unfortunately, PowerExchange does not provide an HA component. When Informatica Professional Services (IPS) works on a project that uses PowerExchange Change Data Capture (CDC), IPS recommends an architecture that provides failover capabilities, but the failover process is manual. A script can be created to automate the failover when the PowerExchange primary or backup node becomes unavailable. In either case, it is important to minimize the time that the PowerExchange Listeners and Loggers are not running.
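Any automation of this kind first needs a way to notice that a node has stopped responding. The following is a minimal sketch of one possible check, assuming that a successful TCP connection to the Listener port is an acceptable first signal. The function name and timeout are illustrative, and a successful connection only shows that something is accepting connections on that port, not that the Listener is healthy.

```python
import socket


def listener_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout.

    This is only a reachability probe (an assumption of this sketch);
    it does not speak the PowerExchange protocol.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Hypothetical host name; 2480 is the Oracle Listener port used in this article.
    if not listener_reachable("pwx-primary.example.com", 2480):
        print("Primary Listener port is not reachable; consider starting failover")
```

A production script would normally retry several times before declaring the node unavailable, so that a brief network interruption does not trigger an unnecessary failover.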

Description

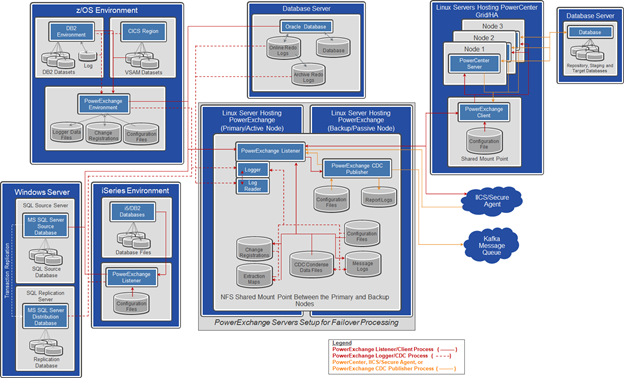

The image above depicts a failover architecture that can be implemented for PowerExchange CDC. This architecture has two Linux servers, with an NFS shared mount point between the two servers. The PowerExchange environment resides on the NFS shared mount point and hosts multiple PowerExchange Listeners/Loggers for Oracle and SQL Server. In addition, it hosts remote PowerExchange Listeners/Loggers for z/OS and i5/OS. If the PowerExchange CDC Publisher is being used, it can be placed on the same NFS shared mount point as PowerExchange CDC so that it also has failover capabilities.

Besides providing support for failover, another benefit of this failover architecture is that it will allow maintenance to be done on one of the Linux PowerExchange servers while the other continues to support the environment. Often, sites will implement this architecture and flip-flop between the primary server and backup server on a monthly basis.

Implementing the NFS shared mount point between the primary and backup server nodes, for the PowerExchange Listeners and Loggers, requires the following.

- Use Linux physical or virtual servers to reduce the potential of stale reads. Do not use Windows servers because of the problems associated with Windows shared drives.

- The NFS shared mount point needs to be visible to the primary and backup Linux servers.

- The speed of the NFS shared mount point between the two Linux servers should be relatively fast. Either NFS3 or NFS4 is acceptable for the mount point.

- To reduce the likelihood of stale reads, include the “noac” and “actimeo=0” attributes on the Linux mount command.
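The mount-option requirement above can be verified with a small check. This is a minimal sketch, assuming the shared mount is defined in an `/etc/fstab`-style entry; the server name, export path, and mount directory in the example are hypothetical.

```python
# Options this article recommends to reduce the likelihood of stale reads.
REQUIRED_OPTIONS = {"noac", "actimeo=0"}


def missing_nfs_options(fstab_line):
    """Return the recommended options that are absent from an fstab-style NFS entry.

    Expected fields: device, mount point, file system type, options, [dump, pass].
    """
    fields = fstab_line.split()
    if len(fields) < 4:
        raise ValueError("expected at least 4 whitespace-separated fields")
    fs_type = fields[2]
    options = set(fields[3].split(","))
    if not fs_type.startswith("nfs"):
        raise ValueError("not an NFS entry: " + fs_type)
    return REQUIRED_OPTIONS - options


if __name__ == "__main__":
    # Hypothetical entry for the shared PowerExchange mount point.
    entry = "nfs-server:/export/pwx /opt/pwx nfs4 rw,hard,noac,actimeo=0 0 0"
    print(sorted(missing_nfs_options(entry)))  # prints []
```

The check reads the entry as written in the file system table rather than the kernel's view of the mount, because the kernel may report attribute-cache settings under different option names.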

In this architecture, all of the PowerExchange binaries, configuration files, CDC change registration files, CDC extraction map files and CDC data files reside on the NFS shared mount point between the two Linux servers. Only the .bash_profile will need to be updated on each of the Linux servers.

Failover planning should also be done for the PowerExchange default dbmover.cfg configuration file and the PowerExchange Client dbmover.cfg configuration file being used by PowerCenter. The IP address or DNS name for each of the Linux servers hosting the PowerExchange Listeners and Loggers will be different. The PowerExchange default dbmover.cfg file and PowerExchange Client dbmover.cfg file should contain a NODE= statement for the primary server node and backup server node. The location name of the Listener should be the same in both of these NODE= statements. For PowerCenter, this eliminates the need to edit the Location attribute in the PowerCenter connections. Only one of these NODE= statements should be active and the other NODE= statement should be commented out as shown in the example below.

/* Primary Server Node for the Oracle Listener

NODE=(Oracle_Listener,TCPIP,123.456.789.012,2480)

/* Backup Server Node for the Oracle Listener

/*NODE=(Oracle_Listener,TCPIP,456.789.012.345,2480)

/* Primary Server Node for the SQL Server Listener

NODE=(MSSQL_Listener,TCPIP,123.456.789.012,2481)

/* Backup Server Node for the SQL Server Listener

/*NODE=(MSSQL_Listener,TCPIP,456.789.012.345,2481)

This will minimize the changes that need to be done in the PowerCenter Server environment when the primary Linux PowerExchange server becomes unavailable and the backup Linux PowerExchange server is activated.
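Because the two NODE= statements for a Listener differ only in the host field, switching between them is a mechanical text change. The following is a minimal sketch of that change, assuming the statements follow the `NODE=(name,TCPIP,host,port)` layout used in this article and that a commented-out statement begins with `/*`. The function name is illustrative and is not part of PowerExchange.

```python
import re

# Matches an active or commented-out NODE= statement and captures the host field.
NODE_RE = re.compile(
    r"^\s*(?P<off>/\*)?\s*(?P<stmt>NODE=\([^,]+,TCPIP,(?P<host>[^,]+),.*)$"
)


def switch_active_host(cfg_text, active_host, standby_host):
    """Uncomment NODE= statements for active_host and comment out those for standby_host.

    All other lines, including descriptive comment lines, are left unchanged.
    """
    out = []
    for line in cfg_text.splitlines():
        m = NODE_RE.match(line)
        if m and m.group("host") == active_host:
            out.append(m.group("stmt"))
        elif m and m.group("host") == standby_host:
            out.append("/*" + m.group("stmt"))
        else:
            out.append(line)
    trailing = "\n" if cfg_text.endswith("\n") else ""
    return "\n".join(out) + trailing


if __name__ == "__main__":
    cfg = (
        "/* Primary Server Node for the Oracle Listener\n"
        "NODE=(Oracle_Listener,TCPIP,123.456.789.012,2480)\n"
        "/* Backup Server Node for the Oracle Listener\n"
        "/*NODE=(Oracle_Listener,TCPIP,456.789.012.345,2480)\n"
    )
    # Activate the backup node and deactivate the primary node.
    print(switch_active_host(cfg, "456.789.012.345", "123.456.789.012"))
```

The function only transforms text. A real script would read the dbmover.cfg file, keep a backup copy, and write the result back. Swapping the two host arguments reverses the change when the primary server returns to service.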

If the primary Linux PowerExchange server becomes unavailable, all of the PowerExchange Listeners and Loggers will stop running. In addition, any of the PowerCenter CDC workflows using these PowerExchange Listeners will also stop along with any IICS CDC workflow tasks and the PowerExchange CDC Publisher processes. If the PowerCenter session properties are set up correctly so that advanced GMD recovery is in effect, any of the uncommitted updates to the target database will be rolled back. For more information on these settings, review Chapter 6 – Restart and Recovery in the PowerExchange Interfaces for PowerCenter manual.

When the primary Linux server node fails, the following steps will need to be completed to get the PowerExchange Listeners, PowerExchange Loggers, PowerCenter CDC workflows, IICS CDC workflow tasks, and PowerExchange CDC Publisher processes restarted.

- Start the PowerExchange Loggers on the backup Linux server and monitor the Logger messages being generated, for a period of time, to ensure that they are running properly.

- Start the PowerExchange Listeners on the backup Linux server and monitor the Listener messages being generated, for a period of time, to ensure that they are running properly.

- If PowerCenter is being used, edit the PowerExchange Client dbmover.cfg configuration file being used by PowerCenter. Comment out the NODE= statements for the primary server node and uncomment the NODE= statements for the backup server node. The edited version of these NODE= statements should look like the example below.

/* Primary Server Node for the Oracle Listener

/*NODE=(Oracle_Listener,TCPIP,123.456.789.012,2480)

/* Backup Server Node for the Oracle Listener

NODE=(Oracle_Listener,TCPIP,456.789.012.345,2480)

/* Primary Server Node for the SQL Server Listener

/*NODE=(MSSQL_Listener,TCPIP,123.456.789.012,2481)

/* Backup Server Node for the SQL Server Listener

NODE=(MSSQL_Listener,TCPIP,456.789.012.345,2481)

- If PowerCenter is being used, restart the PowerCenter CDC workflows. The PowerCenter CDC workflows will use the restart tokens that were generated when the CDC workflows stopped. Monitor the session log messages being generated for a period of time to ensure that they are running properly.

- If IICS is being used, edit the connections being used so that the Listener Location reflects the IP address or DNS name for the backup PowerExchange server along with the port assignment.

- If IICS is being used, restart the IICS CDC workflow tasks. The IICS CDC workflow tasks will use the restart tokens that were generated when the workflow tasks stopped. Monitor the workflow task messages being generated for a period of time to ensure that they are running properly.

- If the PowerExchange CDC Publisher is being used, edit the PowerExchange default dbmover.cfg configuration file located in the $PWX_HOME directory. Comment out the NODE= statements for the primary server node and uncomment the NODE= statements for the backup server node. The edited version of these NODE= statements should look like the example below.

/* Primary Server Node for the Oracle Listener

/*NODE=(Oracle_Listener,TCPIP,123.456.789.012,2480)

/* Backup Server Node for the Oracle Listener

NODE=(Oracle_Listener,TCPIP,456.789.012.345,2480)

/* Primary Server Node for the SQL Server Listener

/*NODE=(MSSQL_Listener,TCPIP,123.456.789.012,2481)

/* Backup Server Node for the SQL Server Listener

NODE=(MSSQL_Listener,TCPIP,456.789.012.345,2481)

- If PowerExchange CDC Publisher is being used, restart the PowerExchange CDC Publisher process. The PowerExchange CDC Publisher will use the checkpoint file located in the $PWXPUB_HOME/instance/checkpoint directory that was generated when the PowerExchange CDC Publisher stopped.
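The order of the steps above matters, and several of them apply only when a given product is in use. The following is a minimal sketch that builds the ordered checklist for a particular site. It is an illustration of the sequence only: the function name is hypothetical, and the sketch does not run any PowerExchange, PowerCenter, or IICS commands.

```python
def failover_steps(powercenter, iics, cdc_publisher):
    """Return the ordered failover checklist for the products in use."""
    # Loggers are started before Listeners, as in the steps above.
    steps = [
        "Start the PowerExchange Loggers on the backup server and monitor their messages",
        "Start the PowerExchange Listeners on the backup server and monitor their messages",
    ]
    if powercenter:
        steps.append("Switch the NODE= statements in the PowerExchange Client dbmover.cfg to the backup node")
        steps.append("Restart the PowerCenter CDC workflows and monitor the session logs")
    if iics:
        steps.append("Point the IICS connections at the backup server address and port")
        steps.append("Restart the IICS CDC workflow tasks and monitor their messages")
    if cdc_publisher:
        steps.append("Switch the NODE= statements in the default dbmover.cfg in $PWX_HOME to the backup node")
        steps.append("Restart the PowerExchange CDC Publisher process")
    return steps


if __name__ == "__main__":
    # Example: a site that uses PowerCenter and the CDC Publisher but not IICS.
    for number, step in enumerate(failover_steps(True, False, True), start=1):
        print(f"{number}. {step}")
```

Keeping the checklist in one place makes it easier to review the sequence with the operations team before any of the steps are automated.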

When implementing this type of PowerExchange failover architecture, it is advisable to involve Informatica Professional Services. An IPS resource can implement this architecture and ensure that it functions correctly with minimal impact to the PowerExchange, PowerCenter, IICS, and PowerExchange CDC Publisher environments.