Challenge

Understanding how parameters, variables, and parameter files work and using them for maximum efficiency.

Description

Prior to the release of PowerCenter 5, the only variables inherent to the product were defined to specific transformations and to those server variables that were global in nature. Transformation variables were defined as variable ports in a transformation and could only be used in that specific transformation object (e.g., Expression, Aggregator, and Rank transformations). Similarly, global parameters defined within Server Manager would affect the subdirectories for source files, target files, log files, and so forth.

More current versions of PowerCenter made variables and parameters available across the entire mapping rather than for a specific transformation object. In addition, they provide built-in parameters for use within Workflow Manager. Using parameter files, these values can change from session-run to session-run. With the addition of workflows, parameters can now be passed to every session contained in the workflow, providing more flexibility and reducing parameter file maintenance. Other important functionality that has been added in recent releases is the ability to dynamically create parameter files that can be used in the next session in a workflow or in other workflows.

Parameters and Variables

Use a parameter file to define the values for parameters and variables used in a workflow, worklet, mapping, or session. A parameter file can be created using a text editor such as WordPad or Notepad. List the parameters or variables and their values in the parameter file. Parameter files can contain the following types of parameters and variables:

- Workflow variables

- Worklet variables

- Session parameters

- Mapping parameters and variables

When using parameters or variables in a workflow, worklet, mapping, or session, the Integration Service checks the parameter file to determine the start value of the parameter or variable. Use a parameter file to initialize workflow variables, worklet variables, mapping parameters, and mapping variables. If not defining start values for these parameters and variables, the Integration Service checks for the start value of the parameter or variable in other places.

Session parameters must be defined in a parameter file. Because session parameters do not have default values, if the Integration Service cannot locate the value of a session parameter in the parameter file, it fails to initialize the session. To include parameter or variable information for more than one workflow, worklet, or session in a single parameter file, create separate sections for each object within the parameter file.

Also, create multiple parameter files for a single workflow, worklet, or session and change the file that these tasks use, as necessary. To specify the parameter file that the Integration Service uses with a workflow, worklet, or session, do either of the following:

- Enter the parameter file name and directory in the workflow, worklet, or session properties.

- Start the workflow, worklet, or session using pmcmd and enter the parameter filename and directory in the command line.

If entering a parameter file name and directory in the workflow, worklet, or session properties and in the pmcmd command line, the Integration Service uses the information entered in the pmcmd command line.

Parameter File Format

When entering values in a parameter file, precede the entries with a heading that identifies the workflow, worklet or session whose parameters and variables are to be assigned. Assign individual parameters and variables directly below this heading, entering each parameter or variable on a new line. List parameters and variables in any order for each task.

The following heading formats can be defined:

- Workflow variables - [folder name.WF:workflow name]

- Worklet variables -[folder name.WF:workflow name.WT:worklet name]

- Worklet variables in nested worklets - [folder name.WF:workflow name.WT:worklet name.WT:worklet name...]

- Session parameters, plus mapping parameters and variables - [folder name.WF:workflow name.ST:session name] or [folder name.session name] or [session name]

Below each heading, define parameter and variable values as follows:

- parameter name=value

- parameter2 name=value

- variable name=value

- variable2 name=value

For example, a session in the production folder, s_MonthlyCalculations, uses a string mapping parameter, $$State, that needs to be set to MA, and a datetime mapping variable, $$Time. $$Time already has an initial value of 9/30/2000 00:00:00 saved in the repository, but this value needs to be overridden to 10/1/2000 00:00:00. The session also uses session parameters to connect to source files and target databases, as well as to write session log to the appropriate session log file. The following table shows the parameters and variables that can be defined in the parameter file:

| Parameter and Variable Type | Parameter and Variable Name | Desired Definition |

| String Mapping Parameter | $$State | MA |

| Datetime Mapping Variable | $$Time | 10/1/2000 00:00:00 |

| Source File (Session Parameter) | $InputFile1 | Sales.txt |

| Database Connection (Session Parameter) | $DBConnection_Target |

Sales (database connection) |

| Session Log File (Session Parameter) | $PMSessionLogFile | d:/session logs/firstrun.txt |

The parameter file for the session includes the folder and session name, as well as each parameter and variable:

- [Production.s_MonthlyCalculations]

- $$State=MA

- $$Time=10/1/2000 00:00:00

- $InputFile1=sales.txt

- $DBConnection_target=sales

- $PMSessionLogFile=D:/session logs/firstrun.txt

The next time the session runs, edit the parameter file to change the state to MD and delete the $$Time variable. This allows the Integration Service to use the value for the variable that was set in the previous session run

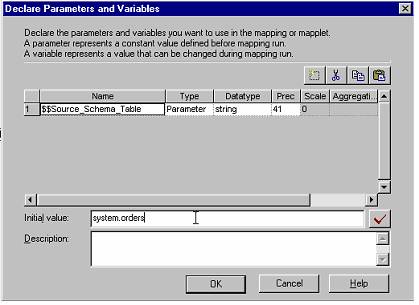

Mapping Variables

Declare mapping variables in PowerCenter Designer using the menu option Mappings -> Parameters and Variables (See the first figure, below). After selecting mapping variables, use the pop-up window to create a variable by specifying its name, data type, initial value, aggregation type, precision, and scale. This is similar to creating a port in most transformations (See the second figure, below).

Variables, by definition, are objects that can change value dynamically. PowerCenter has four functions to affect change to mapping variables:

- SetVariable

- SetMaxVariable

- SetMinVariable

- SetCountVariable

A mapping variable can store the last value from a session run in the repository to be used as the starting value for the next session run.

- Name. The name of the variable should be descriptive and be preceded by $$ (so that it is easily identifiable as a variable). A typical variable name is: $$Procedure_Start_Date.

- Aggregation type. This entry creates specific functionality for the variable and determines how it stores data. For example, with an aggregation type of Max, the value stored in the repository at the end of each session run would be the maximum value across ALL records until the value is deleted.

- Initial value. This value is used during the first session run when there is no corresponding and overriding parameter file. This value is also used if the stored repository value is deleted. If no initial value is identified, then a data-type specific default value is used.

Variable values are not stored in the repository when the session:

- Fails to complete.

- Is configured for a test load.

- Is a debug session.

- Runs in debug mode and is configured to discard session output.

Order of Evaluation

The start value is the value of the variable at the start of the session. The start value can be a value defined in the parameter file for the variable, a value saved in the repository from the previous run of the session, a user-defined initial value for the variable, or the default value based on the variable data type. The Integration Service looks for the start value in the following order:

- Value in session parameter file

- Value saved in the repository

- Initial value

- Default value

Mapping Parameters and Variables

Since parameter values do not change over the course of the session run, the value used is based on:

- Value in session parameter file

- Initial value

- Default value

Once defined, mapping parameters and variables can be used in the Expression Editor section of the following transformations:

- Expression

- Filter

- Router

- Update Strategy

- Aggregator

Mapping parameters and variables also can be used within the Source Qualifier in the SQL query, user-defined join, and source filter sections, as well as in a SQL override in the lookup transformation.

Guidelines for Creating Parameter Files

Use the following guidelines when creating parameter files:

- Enter folder names for non-unique session names. When a session name exists more than once in a repository, enter the folder name to indicate the location of the session.

- Create one or more parameter files. Assign parameter files to workflows, worklets, and sessions individually. Specify the same parameter file for all of these tasks or create several parameter files.

- If including parameter and variable information for more than one session in the file, create a new section for each session. The folder name is optional.

[folder_name.session_name]

parameter_name=value

variable_name=value

mapplet_name.parameter_name=value

[folder2_name.session_name]

parameter_name=value

variable_name=value

mapplet_name.parameter_name=value

- Specify headings in any order. Place headings in any order in the parameter file. However, if defining the same parameter or variable more than once in the file, the Integration Service assigns the parameter or variable value using the first instance of the parameter or variable.

- Specify parameters and variables in any order. Below each heading, the parameters and variables can be specified in any order.

- When defining parameter values, do not use unnecessary line breaks or spaces. The Integration Service may interpret additional spaces as part of the value.

- List all necessary mapping parameters and variables. Values entered for mapping parameters and variables become the start value for parameters and variables in a mapping. Mapping parameter and variable names are not case sensitive.

- List all session parameters. Session parameters do not have default values. An undefined session parameter can cause the session to fail. Session parameter names are not case sensitive.

- Use correct date formats for datetime values. When entering datetime values, use the following date formats:

MM/DD/RR

MM/DD/RR HH24:MI:SS

MM/DD/YYYY

MM/DD/YYYY HH24:MI:SS

- Do not enclose parameters or variables in quotes. The Integration Service interprets everything after the equal sign as part of the value.

- Do enclose parameters in single quotes. In a Source Qualifier SQL Override use single quotes if the parameter represents a string or date/time value to be used in the SQL Override.

- Precede parameters and variables created in mapplets with the mapplet name as follows:

mapplet_name.parameter_name=value

mapplet2_name.variable_name=value

Sample: Parameter Files and Session Parameters

Parameter files, along with session parameters, allow you to change certain values between sessions. A commonly-used feature is the ability to create user-defined database connection session parameters to reuse sessions for different relational sources or targets. Use session parameters in the session properties, and then define the parameters in a parameter file. To do this, name all database connection session parameters with the prefix $DBConnection, followed by any alphanumeric and underscore characters. Session parameters and parameter files help reduce the overhead of creating multiple mappings when only certain attributes of a mapping need to be changed.

Using Parameters in Source Qualifiers

Another commonly used feature is the ability to create parameters in the source qualifiers, which allows you to reuse the same mapping, with different sessions, to extract specified data from the parameter files the session references. Moreover, there may be a time when it is necessary to create a mapping that will create a parameter file and the second mapping to use that parameter file created from the first mapping. The second mapping pulls the data using a parameter in the Source Qualifier transformation, which reads the parameter from the parameter file created in the first mapping. In the first case, the idea is to build a mapping that creates the flat file, which is a parameter file for another session to use.

Sample: Variables and Parameters in an Incremental Strategy

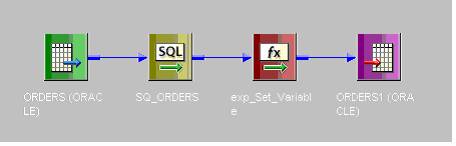

Variables and parameters can enhance incremental strategies. The following example uses a mapping variable, an expression transformation object, and a parameter file for restarting.

Scenario

Company X wants to start with an initial load of all data, but wants subsequent process runs to select only new information. The environment data has an inherent Post_Date that is defined within a column named Date_Entered that can be used. The process will run once every twenty-four hours.

Sample Solution

Create a mapping with source and target objects. From the menu create a new mapping variable named $$Post_Date with the following attributes:

- TYPE Variable

- DATATYPE Date/Time

- AGGREGATION TYPE MAX

- INITIAL VALUE 01/01/1900

Note that there is no need to encapsulate the INITIAL VALUE with quotation marks. However, if this value is used within the Source Qualifier SQL, it may be necessary to use native RDBMS functions to convert (e.g., TO DATE(--,--)). Within the Source Qualifier Transformation, use the following in the Source_Filter Attribute: DATE_ENTERED > to_Date(' $$Post_Date','MM/DD/YYYY HH24:MI:SS') [please be aware that this sample refers to Oracle as the source RDBMS]. Also note that the initial value 01/01/1900 will be expanded by the Integration Service to 01/01/1900 00:00:00, hence the need to convert the parameter to a datetime.

The next step is to forward $$Post_Date and Date_Entered to an Expression transformation. This is where the function for setting the variable will reside. An output port named Post_Date is created with a data type of date/time. In the expression code section, place the following function:

SETMAXVARIABLE($$Post_Date,DATE_ENTERED)

The function evaluates each value for DATE_ENTERED and updates the variable with the Max value to be passed forward. For example:

DATE_ENTERED |

Resultant POST_DATE |

9/1/2000 |

9/1/2000 |

10/30/2001 |

10/30/2001 |

9/2/2000 |

10/30/2001 |

Consider the following with regard to the functionality:

- In order for the function to assign a value, and ultimately store it in the repository, the port must be connected to a downstream object. It need not go to the target, but it must go to another Expression Transformation. The reason is that the memory will not be instantiated unless it is used in a downstream transformation object.

- In order for the function to work correctly, the rows have to be marked for insert. If the mapping is an update-only mapping (i.e., Treat Rows As is set to Update in the session properties) the function will not work. In this case, make the session Data Driven and add an Update Strategy after the transformation containing the SETMAXVARIABLE function, but before the Target.

- If the intent is to store the original Date_Entered per row and not the evaluated date value, then add an ORDER BY clause to the Source Qualifier. This way, the dates are processed and set in order and data is preserved.

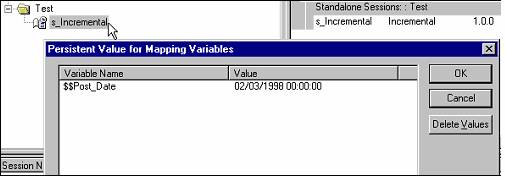

The first time this mapping is run, the SQL will select from the source where Date_Entered is > 01/01/1900 providing an initial load. As data flows through the mapping, the variable gets updated to the Max Date_Entered it encounters. Upon successful completion of the session, the variable is updated in the repository for use in the next session run. To view the current value for a particular variable associated with the session, right-click on the session in the Workflow Monitor and choose View Persistent Values.

The following graphic shows that after the initial run, the Max Date_Entered was 02/03/1998. The next time this session is run, based on the variable in the Source Qualifier Filter, only sources where Date_Entered > 02/03/1998 will be processed.

Resetting or Overriding Persistent Values

To reset the persistent value to the initial value declared in the mapping, view the persistent value from Workflow Manager (see graphic above) and press Delete Values. This deletes the stored value from the repository, causing the Order of Evaluation to use the Initial Value declared from the mapping.

If a session run is needed for a specific date, use a parameter file. There are two basic ways to accomplish this:

- Create a generic parameter file, place it on the server, and point all sessions to that parameter file. A session may (or may not) have a variable, and the parameter file need not have variables and parameters defined for every session using the parameter file. To override the variable, either change, uncomment, or delete the variable in the parameter file.

- Run pmcmd for that session, but declare the specific parameter file within the pmcmd command.

Configuring the Parameter File Location

Specify the parameter filename and directory in the workflow or session properties. To enter a parameter file in the workflow or session properties:

- Select either the Workflow or Session, choose, Edit, and click the Properties tab.

- Enter the parameter directory and name in the Parameter Filename field.

- Enter either a direct path or a server variable directory. Use the appropriate delimiter for the Integration Service operating system.

The following graphic shows the parameter filename and location specified in the session task.

The next graphic shows the parameter filename and location specified in the Workflow.

In this example, after the initial session is run, the parameter file contents may look like:

[Test.s_Incremental]

;$$Post_Date=

By using the semicolon, the variable override is ignored and the Initial Value or Stored Value is used. If, in the subsequent run, the data processing date needs to be set to a specific date (for example: 04/21/2001), then a simple Perl script or manual change can update the parameter file to:

[Test.s_Incremental]

$$Post_Date=04/21/2001

Upon running the sessions, the order of evaluation looks to the parameter file first, sees a valid variable and value and uses that value for the session run. After successful completion, run another script to reset the parameter file.

Sample: Using Session and Mapping Parameters in Multiple Database Environments

Reusable mappings that can source a common table definition across multiple databases, regardless of differing environmental definitions (e.g., instances, schemas, user/logins), are required in a multiple database environment.

Scenario

Company X maintains five Oracle database instances. All instances have a common table definition for sales orders, but each instance has a unique instance name, schema, and login.

DB Instance |

Schema |

Table |

User |

Password |

ORC1 |

aardso |

orders |

Sam |

max |

ORC99 |

environ |

orders |

Help |

me |

HALC |

hitme |

order_done |

Hi |

Lois |

UGLY |

snakepit |

orders |

Punch |

Judy |

GORF |

gmer |

orders |

Brer |

Rabbit |

Each sales order table has a different name, but the same definition:

ORDER_ID |

NUMBER (28) |

NOT NULL, |

DATE_ENTERED |

DATE |

NOT NULL, |

DATE_PROMISED |

DATE |

NOT NULL, |

DATE_SHIPPED |

DATE |

NOT NULL, |

EMPLOYEE_ID |

NUMBER (28) |

NOT NULL, |

CUSTOMER_ID |

NUMBER (28) |

NOT NULL, |

SALES_TAX_RATE |

NUMBER (5,4) |

NOT NULL, |

STORE_ID |

NUMBER (28) |

NOT NULL |

Sample Solution

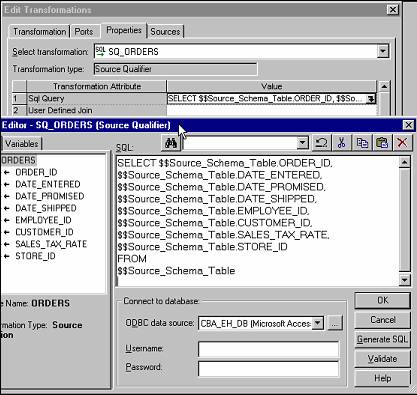

Using Workflow Manager, create multiple relational connections. In this example, the strings are named according to the DB Instance name. Using Designer, create the mapping that sources the commonly defined table. Then create a Mapping Parameter named $$Source_Schema_Table with the following attributes:

Note that the parameter attributes vary based on the specific environment. Also, the initial value is not required since this solution uses parameter files.

Open the Source Qualifier and use the mapping parameter in the SQL Override as shown in the following graphic.

Open the Expression Editor and select Generate SQL. The generated SQL statement shows the columns. Override the table names in the SQL statement with the mapping parameter.

Using Workflow Manager, create a session based on this mapping. Within the Source Database connection drop-down box, choose the following parameter:

$DBConnection_Source

Point the target to the corresponding target and finish.

Now create the parameter files. In this example, there are five separate parameter files.

Parmfile1.txt

[Test.s_Incremental_SOURCE_CHANGES]

$$Source_Schema_Table=aardso.orders

$DBConnection_Source= ORC1

Parmfile2.txt

[Test.s_Incremental_SOURCE_CHANGES]

$$Source_Schema_Table=environ.orders

$DBConnection_Source= ORC99

Parmfile3.txt

[Test.s_Incremental_SOURCE_CHANGES]

$$Source_Schema_Table=hitme.order_done

$DBConnection_Source= HALC

Parmfile4.txt

[Test.s_Incremental_SOURCE_CHANGES]

$$Source_Schema_Table=snakepit.orders

$DBConnection_Source= UGLY

Parmfile5.txt

[Test.s_Incremental_SOURCE_CHANGES]

$$Source_Schema_Table= gmer.orders

$DBConnection_Source= GORF

Use pmcmd to run the five sessions in parallel. The syntax for pmcmd for starting sessions with a particular parameter file is as follows:

pmcmd startworkflow -s serveraddress:portno -u Username -p Password -paramfile parmfilename s_Incremental

You may also use "-pv pwdvariable" if the named environment variable contains the encrypted form of the actual password.

Notes on Using Parameter Files with Startworkflow

When starting a workflow, you can optionally enter the directory and name of a parameter file. The PowerCenter Integration Service runs the workflow using the parameters in the file specified. For UNIX shell users, enclose the parameter file name in single quotes:

-paramfile '$PMRootDir/myfile.txt'

For Windows command prompt users, the parameter file name cannot have beginning or trailing spaces. If the name includes spaces, enclose the file name in double quotes:

-paramfile "$PMRootDir\my file.txt"

Note: When writing a pmcmd command that includes a parameter file located on another machine, use the backslash (\) with the dollar sign ($). This ensures that the machine where the variable is defined expands the server variable.

pmcmd startworkflow -uv USERNAME -pv PASSWORD -s SALES:6258 -f east -w wSalesAvg -paramfile '\$PMRootDir/myfile.txt'

In the event that it is necessary to run the same workflow with different parameter files, use the following five separate commands:

pmcmd startworkflow -u tech_user -p pwd -s 127.0.0.1:4001 -f Test s_Incremental_SOURCE_CHANGES -paramfile \$PMRootDir\ParmFiles\Parmfile1.txt 1 1

pmcmd startworkflow -u tech_user -p pwd -s 127.0.0.1:4001 -f Test s_Incremental_SOURCE_CHANGES -paramfile \$PMRootDir\ParmFiles\Parmfile2.txt 1 1

pmcmd startworkflow -u tech_user -p pwd -s 127.0.0.1:4001 -f Test s_Incremental_SOURCE_CHANGES -paramfile \$PMRootDir\ParmFiles\Parmfile3.txt 1 1

pmcmd startworkflow -u tech_user -p pwd -s 127.0.0.1:4001 -f Test s_Incremental_SOURCE_CHANGES -paramfile \$PMRootDir\ParmFiles\Parmfile4.txt 1 1

pmcmd startworkflow -u tech_user -p pwd -s 127.0.0.1:4001 -f Test s_Incremental_SOURCE_CHANGES -paramfile \$PMRootDir\ParmFiles\Parmfile5.txt 1 1

Alternatively, run the sessions in sequence with one parameter file. In this case, a pre- or post-session script can change the parameter file for the next session.

Dynamically creating Parameter Files with a mapping

Using advanced techniques a PowerCenter mapping can be built that produces as a target file a parameter file (.parm) that can be referenced by other mappings and sessions. When many mappings use the same parameter file it is desirable to be able to easily re-create the file when mapping parameters are changed or updated. This also can be beneficial when parameters change from run to run. There are a few different methods of creating a parameter file with a mapping.

There is a mapping template example on the my.informatica.com that illustrates a method of using a PowerCenter mapping to source from a process table containing mapping parameters and to create a parameter file. This same feat can be accomplished also by sourcing a flat file in a parameter file format with code characters in the fields to be altered.

[folder_name.session_name]

parameter_name= <parameter_code>

variable_name=value

mapplet_name.parameter_name=value

[folder2_name.session_name]

parameter_name= <parameter_code>

variable_name=value

mapplet_name.parameter_name=value

In place of the text <parameter_code> one could place the text filename_<timestamp>.dat. The mapping would then perform a string replace wherever the text <timestamp> occurred and the output might look like:

Src_File_Name= filename_20080622.dat

This method works well when values change often and parameter groupings utilize different parameter sets. The overall benefits of using this method are such that if many mappings use the same parameter file, changes can be made by updating the source table and recreating the file. Using this process is faster than manually updating the file line by line.

Final Tips for Parameters and Parameter Files

Use a single parameter file to group parameter information for related sessions.

When sessions are likely to use the same database connection or directory, you might want to include them in the same parameter file. When connections or directories change, you can update information for all sessions by editing one parameter file. Sometimes you reuse session parameters in a cycle. For example, you might run a session against a sales database everyday, but run the same session against sales and marketing databases once a week. You can create separate parameter files for each session run. Instead of changing the parameter file in the session properties each time you run the weekly session, use pmcmd to specify the parameter file to use when you start the session.

Use reject file and session log parameters in conjunction with target file or target database connection parameters.

When you use a target file or target database connection parameter with a session, you can keep track of reject files by using a reject file parameter. You can also use the session log parameter to write the session log to the target machine.

Use a resource to verify the session runs on a node that has access to the parameter file.

In the Administration Console, you can define a file resource for each node that has access to the parameter file and configure the Integration Service to check resources. Then, edit the session that uses the parameter file and assign the resource. When you run the workflow, the Integration Service runs the session with the required resource on a node that has the resource available.

Save all parameter files in one of the process variable directories.

If you keep all parameter files in one of the process variable directories, such as $SourceFileDir, use the process variable in the session property sheet. If you need to move the source and parameter files at a later date, you can update all sessions by changing the process variable to point to the new directory.