- Upgrade Planner 10.5.x

- Enterprise Data Catalog

The following are the new features and enhancements in Enterprise Data Catalog (EDC) v10.5.2:

User Experience

- Display asset description and composite data domains on the Search Results page

- Enhanced search pre-filter with custom attribute sorting

- Axon Business Terms - Key Data Element (KDE) or Critical Data Element (CDE) enhancements:

- The KDE icon is added to the following sections to quickly identify KDE Axon Business Terms: On assets, In Search results, In Related Glossaries, In Association

- The KDE icon is shown for Enterprise Data Catalog assets where KDE Business Terms are associated

- The KDE attribute can be filtered

- Show Related Logical Assets on the Axon Glossary, Dataset, and System Overview page

- Show Related Technical Assets on the Axon Attribute Overview page

- Show the asset path in the following:

- Business Term to asset association dialog box

- Show All tabular view in the Relationships tab

- Display asset documented foreign keys information on the Keys and Relationships tabs

- Enhance the Salesforce scanner V1 resource asset page to show data domains, basic profiling statistics, and source and inferred data types

Deployment

- Support Log4j 2.17.1

- Reverse proxy and load balancing support for Enterprise Data Catalog web applications

- Email notifications on Resource Job Task failure

- Support external PostgreSQL DB for similarity datastore in Informatica Cluster Service configuration

- Support multi-disk partition, H/A failover SSL for external PostgreSQL DB for similarity datastore to improve performance

- New DAA Dashboard API to extract OOTB dashboard metrics and chart information

- New "accessFilter" GET API to fetch the privileges for specific resources and specific users

Scanner and Discovery Enhancements

New Advanced Scanner

- Azure SQL Data Warehouse - Azure Synapse Analytics (SQL Pool) - Stored Procedures

Scanner and Discovery Enhancements

- Support thresholds for inference and auto acceptance of smart data domains

- Enable Business Term Association for Tableau

- Data profiling on Databricks cluster on AWS

- Acceptance threshold while propagating "Data Domains" to similar columns

- Enhanced Import and Export features:

- Bulk certification

- Details of data match conformance scores and metadata match in exports

- Option to export data type

- Enhanced Avro file support in Amazon S3 scanner V1, ADLS Gen2 scanner V1, and HDFS scanner:

- Support for Avro complex data type, multi-hierarchy file

- Partitioned file detection (strict mode only)

- Profiling in native and Spark mode

- Upper limit for Random % Sampling in Google BigQuery

- Google BigQuery profiling for reserved keywords

- Regex based directory filtering for File system; S3 scanner V2 and ADLS scanner V2

- Unique Key Inference for Azure SQL Database and IBM DB2/ZOS

Effective in version 10.5.2, Informatica includes the following functionality for technical preview:

Scanners and Discovery

New Standard Scanners

Informatica Cloud Data Integration (IICS) metadex scanner V2 (Technical Preview):

- Parameter file support

- Filter support at project, folder, and task levels

- Mapping tasks, PowerCenter tasks, synchronization tasks

Salesforce metadex scanner V2 (Technical Preview)

- Include metadata extraction for Salesforce Sales Cloud and Service Cloud

- Objects, views, triggers, asset descriptions, SFDC API v52 support, user/password and OAuth authentication, connection through proxy support, and metadata filters

For more information, see the MetaDex Scanner Configuration Guide.

Amazon S3 scanner V2 (Technical Preview) and ADLS GEN-2 scanner V2 (Technical Preview):

- Schema merge of nested schema

- Partition pruning. Find the latest partition to identify the schema and for data discovery

- Partition detection for Parquet and Avro files

- Custom or non-Hive-style partitioning support

- Incremental scan

- Exclusion filters for directories

For more information, see the Enterprise Data Catalog Scanner Configuration Guide.

Technical preview functionality is supported for evaluation purposes but is unwarranted and not supported in production environments or any environment you plan to push to production. Informatica intends to include the preview functionality in an upcoming release for production use but might choose not to per changing market or technical circumstances. For more information, contact Informatica Global Customer Support.

Changes

MetaDex Component Names Updated

Effective in version 10.5.2, Informatica updated the component names within the MetaDex framework to better reflect the usage.

The following table lists updates to component names:

| Previous name | Updated name |
| --- | --- |
| Advanced Scanners tool | MetaDex tool |
| Advanced Scanner | MetaDex scanner |
| Advanced Scanners server | MetaDex server |
| Advanced Scanners repository | MetaDex repository |

For more information about the update and usage, see the FAQs on the name update and usage of MetaDex scanners Knowledge Base (KB) article.

Dropped Support

Resources

Effective in version 10.5.2, Enterprise Data Catalog dropped support for the following resource versions that were lower than 10.2:

- Informatica Platform

- PowerCenter

- Informatica Data Quality

Product Availability Matrix (PAM)

Informatica's products rely upon and interact with an extensive range of products supplied by third-party vendors. Examples include database systems, ERP applications, web and application servers, browsers, and operating systems. Support for significant third-party product releases is determined and published in Informatica's Product Availability Matrix (PAM).

The PAM states which third-party product release is supported in combination with a specified Version of an Informatica product.

Here's the PAM for Informatica 10.5.2

PAM updates

- Scanners

- Informatica Data Quality 10.5.2

- Informatica Platform 10.5.2

- Informatica PowerCenter 10.5.2

- Business Glossary 10.5.2

- Oracle 21c

- Teradata 17

- Tableau 2021

- EMR 6.4

- Connection through proxy for ADLS, Amazon S3, Azure SQL Database, Azure SQL Data Warehouse, SharePoint, Google BigQuery

- Profiling

- Certification of AWS Databricks

- Partitioned parquet files on Amazon S3

- Delta tables on AWS Databricks

- Deployment

- Support for Microsoft Edge based on Chromium

- Added support for RHEL 8.5

- Added support for Oracle Linux 8.5 - RHCK Kernel only

- Added support for Suse Linux 15 SP3

- Added support for Oracle 21c to install Enterprise Data Catalog

To obtain the installer download links, contact the Informatica Shipping team.

Find the list of required installers below for EDC v10.5.2:

| Installer | Required/Optional | Notes |
| --- | --- | --- |
| informatica_1052_server_linux-x64.tar | Required | |
| ScannerBinaries.zip | Required | |
| informatica_1052_EDC_ClusterValidationUtility.zip | Required | To validate the cluster before the upgrade |
| informatica_1052_daa_utility.zip | Optional | Required only when DAA is enabled. |
| GenerateCustomSslUtility.zip | Optional | Required if you are not using Self-Signed Certificates. Also required if you have a custom SSL certificate for the Informatica domain, but you want to use default SSL certificates as the client and cluster certificates for the Informatica Cluster Service. |
| CustomSSLScriptsUtil_ExternalCA.zip | Optional | Required if you are using CA Signed Certificates, that is, if you have a custom SSL certificate for the Informatica domain and plan to configure a custom SSL for Enterprise Data Catalog. |
| Informatica_1052_TableauEnrichmentMigrationUtility.zip | Optional | Required only when Tableau enrichments are used. |
| Informatica_1052_PkFkEnrichmentMigrationUtility.zip | Optional | Required only when PK-FK enrichments are used. |
| EDC_Agent_1052_Windows.zip | Optional | Required only when the following resources are used: Note: Ensure that you have the latest version of the agent when upgrading to any target version. |
| ExtendedScannerBinaries.zip | Optional | Required only when you fetch metadata for PowerCenter or Informatica Platform from any of the following versions. |
| PExchange10.5.2.zip | Optional | Required only when PowerExchange is used to connect to IBM DB2 for z/OS |
| Informatica_1052_custom-scanner-validator-assembly.zip | Optional | |
| SAP_Scanner_Binaries.zip | Optional | If you use SAP BW, SAP BW/4HANA, and SAP S/4HANA resources |

You can download the installers based on your Operating System type and version.

Note: Informatica recommends running the Informatica server on one or more machines separate from the machines that host the Informatica Cluster Service.

It is mandatory to rename the binary file as below.

Rename ScannerBinaries_[OStype].zip to ScannerBinaries.zip. For example, rename ScannerBinaries_SUSE.zip to ScannerBinaries.zip

Ensure that the renamed binary is copied to the <Extracted_Installer_Directory>/source/ directory before you start the upgrade from 10.4.0 or 10.4.1 or apply Hotfix 2 on 10.5.0 or 10.5.1.
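The rename-and-copy step can be scripted so that it is not forgotten. The following is a minimal sketch for a POSIX shell; the function name `stage_scanner_binaries`, its arguments, and the example paths are illustrative assumptions and are not part of any Informatica tooling. It copies the file under the required name instead of renaming it in place, so the downloaded archive is left untouched.

```shell
#!/bin/sh
# Hypothetical helper: copy ScannerBinaries_<OStype>.zip into the installer's
# source/ directory under the required name ScannerBinaries.zip.
# Arguments: <OS type, e.g. SUSE or RHEL> <download dir> <extracted installer dir>
stage_scanner_binaries() {
    os_type=$1
    download_dir=$2
    installer_dir=$3
    src="$download_dir/ScannerBinaries_${os_type}.zip"
    dest="$installer_dir/source/ScannerBinaries.zip"

    if [ ! -f "$src" ]; then
        echo "Missing $src" >&2
        return 1
    fi
    if [ ! -d "$installer_dir/source" ]; then
        echo "Missing directory $installer_dir/source" >&2
        return 1
    fi
    cp "$src" "$dest" && echo "Staged $dest"
}

# Example call (both paths are placeholders):
# stage_scanner_binaries SUSE /tmp/downloads /opt/infa_1052_installer
```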

EDC, EDP, and DPM:

- You can apply the 10.5.2 hotfix to versions 10.5 and 10.5.1, including any service pack or cumulative patch.

- For EDC and EDP, you can upgrade to version 10.5.2 from the following previous versions:

- 10.4 including any service pack or cumulative patch

- 10.4.1 including any service pack or cumulative patch

- For DPM, you can upgrade to version 10.5.2 from version 10.4.1.x including any cumulative patch.

Important Notes:

- If Data Engineering, Enterprise Data Catalog, and Enterprise Data Preparation are in the same domain of a version earlier than 10.4, upgrade them to version 10.4 or 10.4.1 before upgrading to 10.5.2.

- If Data Engineering, Enterprise Data Catalog, and Data Privacy Management are in the same domain of a version earlier than 10.4.1.x, upgrade them all to version 10.4.1.x before you upgrade to 10.5.2.

When you upgrade Enterprise Data Catalog from a supported version or apply the hotfix on 10.5, the installer validates if you have backed up the catalog. When you roll back the hotfix, the installer validates if a catalog backup exists.

- If you are currently on Enterprise Data Catalog version 10.5.0 or 10.5.1, follow the steps listed in the Apply the Hotfix to Enterprise Data Catalog Version 10.5.2 section.

- If you are currently on Enterprise Data Catalog version 10.4.0 or 10.4.1 with an Internal/Embedded Cluster, follow the steps in the Upgrade Enterprise Data Catalog in an Internal Cluster section.

- If you are currently on Enterprise Data Catalog version 10.4.0 or 10.4.1 with an External Cluster, follow the steps in the Upgrade Enterprise Data Catalog in an External Cluster section.
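The mapping from the current version to the procedure to follow can be expressed as a small lookup. This is only a sketch of the rules listed above; the function name `edc_upgrade_path` and the labels it prints are made up for illustration.

```shell
#!/bin/sh
# Hypothetical lookup: print which procedure applies to the current EDC version.
# The trailing * lets service packs and cumulative patches (e.g. 10.4.1.3) match.
edc_upgrade_path() {
    case "$1" in
        10.5|10.5.0*|10.5.1*) echo "apply-hotfix" ;;
        10.4|10.4.0*|10.4.1*) echo "upgrade" ;;
        # Anything else has no direct path in this planner; versions earlier
        # than 10.4 must first be upgraded to 10.4 or 10.4.1.
        *)                    echo "no-direct-path" ;;
    esac
}

# Example call:
# edc_upgrade_path 10.4.1.3
```

The cluster type (internal or external) still decides which upgrade section applies when the result is `upgrade`.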

Note: Click here for Support EOL statements

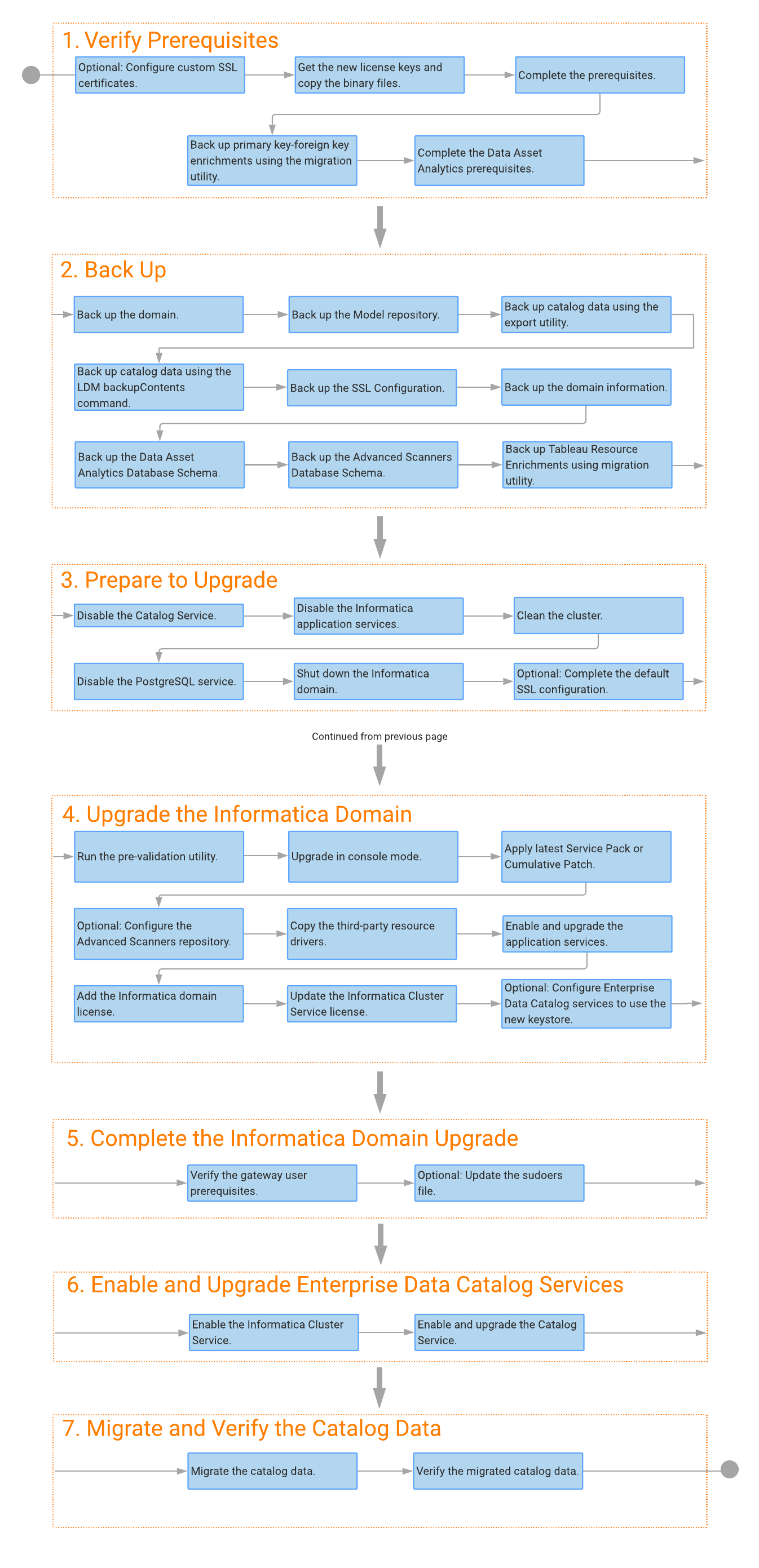

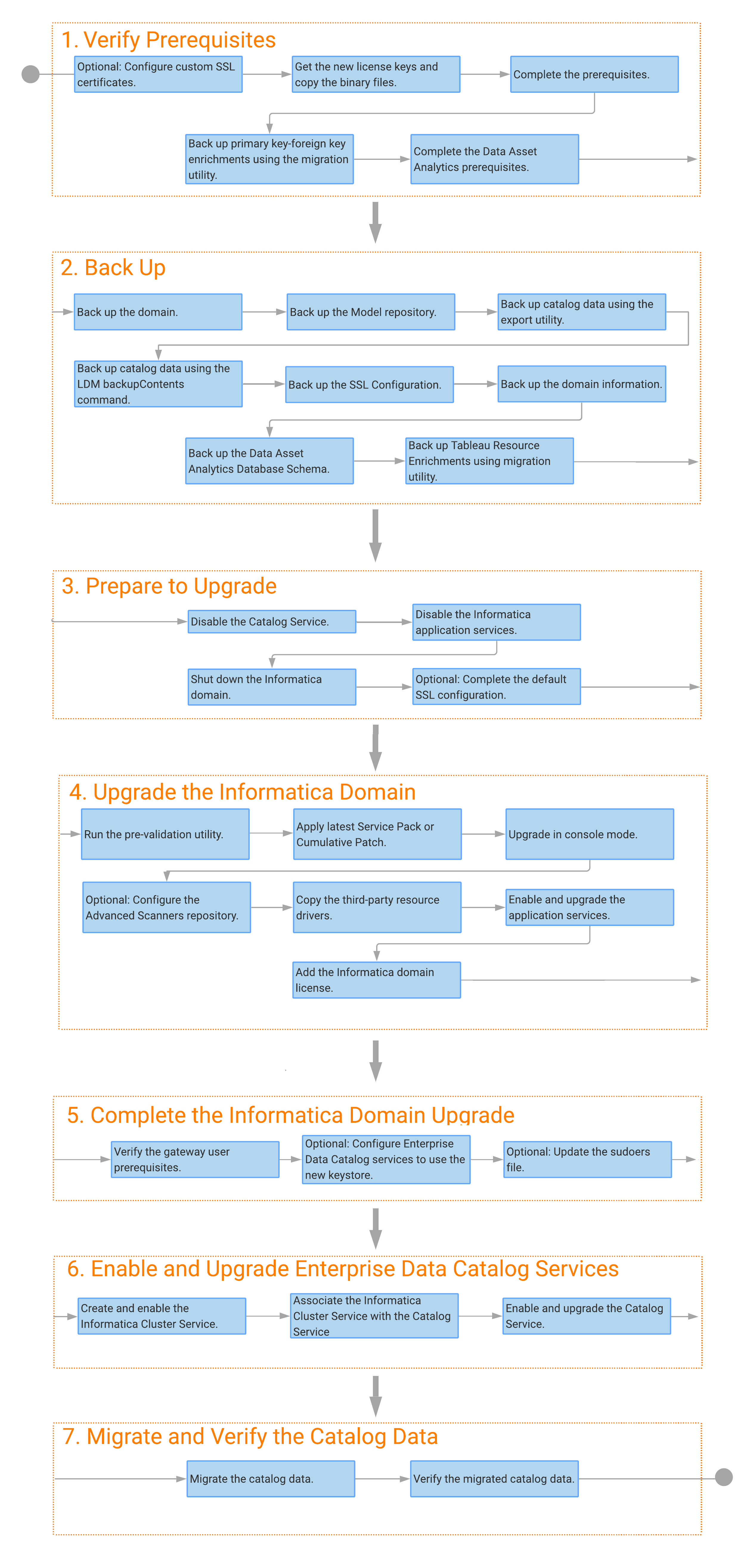

The upgrade checklist summarizes the tasks that you must perform to complete an upgrade.

You can perform either an in-place (inline) upgrade or a parallel upgrade of the domain:

In-place upgrade: Install 10.5.2 on top of 10.4.x. Shut down 10.4.x, and then upgrade.

Parallel upgrade: Clone 10.4.x to a new machine, set up a new 10.5.2 environment, and run 10.4.x and 10.5.2 in parallel.

Note: ICS and IHS cannot share cluster hardware. This means that you cannot run 10.4.x and 10.5.2 concurrently on the same cluster servers.

You can upgrade EDC deployed on either an external or an internal cluster to version 10.5.2.

The following are the scenarios:

- The external cluster is shared with other products (both INFA and 3rd-party). We expect the customer to provide clean cluster nodes, procure new hardware or decommission some cluster nodes from external clusters and re-purpose the hardware.

- The external cluster is dedicated to Catalog Service, and the steps are documented. When you upgrade from an external cluster, you can plan to deploy EDC on one, three, or six nodes in a cluster.

- Internal cluster: We own all the cluster nodes and the steps are documented

The following ports must be open between the Informatica domain nodes and from the Informatica node to the EDC cluster nodes:

Informatica Platform

| Port Description | Port (HTTP) | Port (HTTPS) | External Ports | URL |
| --- | --- | --- | --- | --- |
| Node port | 6005 | | | |
| Service Manager port | 6006 | | | |
| Service Manager shutdown port | 6007 | | | |
| Informatica Administrator port | 6008 | 8443 | Yes | http://<Informatica Node>:6008 (for each Informatica node) |
| Informatica Administrator shutdown port | 6009 | | | |
| Range of dynamic ports for application services | 6014 to 6114 | | | |
| Analyst Service | 8085 | 8086 | Yes | http://<Informatica Node>:8085/Analyst (for each Informatica node) |
| Content Management Service | 8105 | 8106 | | |
| Data Integration Service | 8095 | 8096 | | |
| Catalog Service | 9085 | 9086 | Yes | http://<Informatica Node>:9085 (for each Informatica node) |
| Informatica Cluster Service | 9075, 8080, 8088, 8090 | 9076 | | http://<Informatica Node>:9085 (for each Informatica node) |
| Advanced Scanner Port (required only if Advanced Scanner is set up) | 48090 | | Yes | http://<Informatica Node>:48090 (for each Informatica node) |

| Port | Port Type | Bidirectional between all nodes in the Informatica domain and cluster | Bidirectional between all cluster nodes and user web browser | Description | URL for User WS |
| --- | --- | --- | --- | --- | --- |
| 4648 | TCP | Y | Y | Nomad Server Port | |
| 4647 | TCP | Y | | Nomad Client Port | |
| 4646 | TCP | Y | | Nomad HTTP Port | http://<Cluster Node>:4646 (for each cluster node) |
| 2181 | TCP | Y | | Zookeeper Port | |
| 2888 | TCP | Y | | Zookeeper Peer Port | |
| 3888 | TCP | Y | | Zookeeper Leader Port | |
| 8983 | TCP | Y | Y | Solr HTTP port | http://<Cluster Node>:8983 (for each cluster node) |
| 8993 | TCP | Y | | Solr Port | |
| 27017 | TCP | Y | | MongoDB port if it is not configured as a shard member or a configuration server | |
| 27018 | TCP | Y | | MongoDB port if it is configured as a shard member | |
| 27019 | TCP | Y | | MongoDB port if it is configured as a configuration server | |
| 5432 | TCP | Y | | PostgreSQL port | |
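Once the cluster services are running, reachability of the cluster ports can be spot-checked from a domain node. The sketch below assumes a netcat build that supports `-z` (connect without sending data) and `-w` (timeout in seconds); the variable and function names are illustrative. A port reported as closed before the Informatica Cluster Service has started does not by itself indicate a firewall problem, because nothing is listening yet.

```shell
#!/bin/sh
# Cluster ports taken from the table above (all TCP).
ICS_CLUSTER_PORTS="4646 4647 4648 2181 2888 3888 8983 8993 27017 27018 27019 5432"

# Hypothetical helper: report whether each cluster port on a host accepts
# TCP connections. Returns non-zero if at least one port is not reachable.
check_cluster_ports() {
    host=$1
    failed=0
    for port in $ICS_CLUSTER_PORTS; do
        if nc -z -w 3 "$host" "$port" >/dev/null 2>&1; then
            echo "open    $host:$port"
        else
            echo "closed  $host:$port"
            failed=1
        fi
    done
    return "$failed"
}

# Example call (the host name is a placeholder):
# check_cluster_ports cluster-node-1.example.com
```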

Before upgrading the domain and server files, complete the following pre-upgrade tasks:

- Log in to the machine with the same user account you used to install the previous version.

- Review the Operating System specific requirements. Review the prerequisites and environment variable configuration.

Unset the following environment variables before you start the Upgrade: INFA_HOME, INFA_DOMAINS_FILE, DISPLAY, JRE_HOME, INFA_TRUSTSTORE, INFA_TRUSTSTORE_PASSWORD

Verify that LD_LIBRARY_PATH does not contain earlier versions of Informatica.

Verify that the PATH environment variables do not contain earlier versions of Informatica.

- Ensure an additional 100 GB of free disk space on the machine where the Informatica domain runs.

- Java Development Kit (JDK) version 1.8 or later must be installed.

- Install the following applications and packages on all nodes before you upgrade Enterprise Data Catalog:

- Bash shell

- rsync

- libcurl

- xz-libs

- systemctl

- zip

- unzip

- tar

- wget

- scp

- rpm

- curl

- nslookup

- md5sum

- netstat

- ping

- ifconfig

- cksum

- dnsdomainname

- libncurses5

- OpenSSL version 1.0.1e-30.el6_6.5.x86_64 or later.

Note: OpenSSL 3.0 is not supported.

Verify that the $PATH variable points to the /usr/bin directory to use the correct version of Linux OpenSSL.

In 10.5.1.0.1 and later versions, rsync is used instead of scp for file transfers.

- Verify that ntpd is synchronized between the Informatica domain node and the cluster nodes.

- Verify that you have the new license key for Informatica Cluster Service.

- Copy the 10.5.2 installer binary files from the Akamai download location mentioned in the fulfillment email and extract the files to a directory on the machine where you plan to upgrade EDC.

- Copy the new scanner binaries from the Akamai Download Manager to your installation/source directory.

- Keep a record of the asset count from the catalog, Catalog Administrator, and the Data Asset Analytics dashboard for reference. Also, keep the screenshots of the resources in LDMadmin.

URL to be executed: https://<Catalog Hostname>:<Port>/access/2/catalog/data/search?defaultFacets=false&disableSemanticSearch=true&enableLegacySearch=false&facet=true&facetId=core.classType&facetId=core.resourceName&facetId=core.resourceType&highlight=false&includeRefObjects=false&offset=0&pageSize=1&q=*&tabId=all

The response to this request includes the total asset count.

Note: In a multi-node domain, upgrade a gateway node before upgrading other nodes.
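Several of the environment checks in the list above lend themselves to a short script. The following sketch assumes a POSIX shell; all function and variable names are illustrative. It probes only commands that can be found on PATH, so libraries such as libcurl, xz-libs, and libncurses5 still need a check through the package manager, and the OpenSSL version still has to be verified separately.

```shell
#!/bin/sh
# Variables that the checklist says to unset before the upgrade.
UPGRADE_UNSET_VARS="INFA_HOME INFA_DOMAINS_FILE DISPLAY JRE_HOME INFA_TRUSTSTORE INFA_TRUSTSTORE_PASSWORD"

# Commands from the required-package list that can be probed on PATH.
UPGRADE_REQUIRED_CMDS="bash rsync systemctl zip unzip tar wget scp rpm curl nslookup md5sum netstat ping ifconfig cksum dnsdomainname openssl"

# Unset the listed variables in the current shell.
unset_upgrade_vars() {
    for name in $UPGRADE_UNSET_VARS; do
        unset "$name"
    done
}

# Print every command from the list that is not found on PATH.
missing_upgrade_cmds() {
    for cmd in $UPGRADE_REQUIRED_CMDS; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}

# Succeed when no entry of a colon-separated path value starts with the old
# Informatica home (simple prefix match).
# Example: path_is_clean "$LD_LIBRARY_PATH" /opt/informatica/10.4.1
path_is_clean() {
    case ":$1:" in
        *":$2"*) return 1 ;;
        *)       return 0 ;;
    esac
}
```

Because `unset_upgrade_vars` changes the current shell, the file has to be sourced (for example with `. ./pre_upgrade_checks.sh`, a placeholder file name) rather than run as a child process before the installer is started from the same session.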

Before upgrading the domain and server files, complete the following pre-upgrade tasks:

- Verify that the firewall is disabled before starting ICS. Check the firewall status in all the cluster machines using the command service firewalld status.

- For customers with a large MRS, the MRS upgrade needs 8 GB of heap memory. Explicitly increase the heap size to 8 GB before you trigger the upgrade. To increase the MRS heap size, go to the admin console and select MRS > Properties > Advanced Properties > Maximum Heap Size.

If the backup contains a large amount of profiling data (similarity data), the infacmd process needs 4 GB of heap, which you can set with the following environment variable:

export ICMD_JAVA_OPTS=-Xmx4096m

The ICMD_JAVA_OPTS environment variable applies to the infacmd command line program.

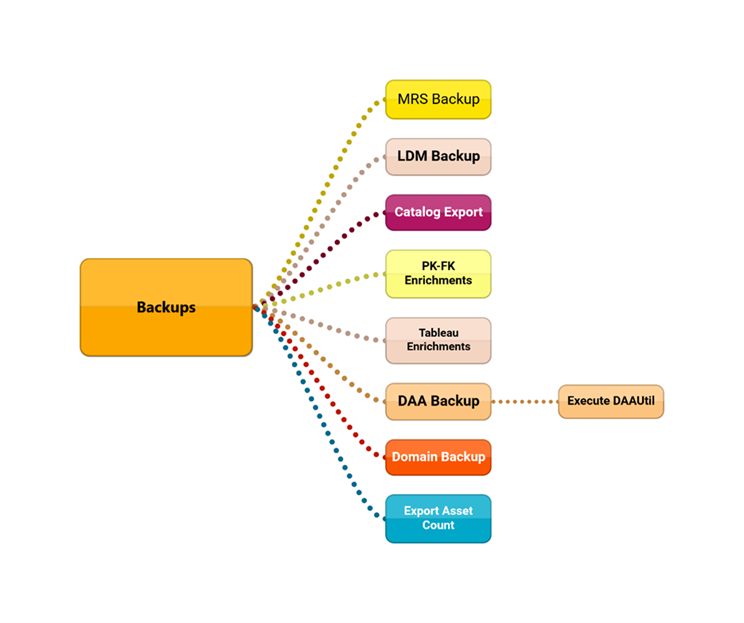

- Verify that you have taken the below backups before you plan to upgrade.

- Domain Backup

- MRS backup

- Back Up Catalog Data Using the Export Utility

- LDM backup using infacmd ldm BackupContents command

- Back up the domain truststore and keystore files

- Backup the default keystore files

- Backup all the keystores and truststores used by different services

- Back up the Sitekey, domains.infa,server.xml,nodemeta.xml files

- It is best practice to take the complete INFA_HOME binaries backup in case of an in-place Upgrade.

- Backup Tableau Resource Enrichments using Migration Utility

- Backup of DAA Database schema

- Back-Up Primary Key-Foreign Key Enrichments Using the Migration Utility

- Back-Up Advanced Scanner/Metadex

- Back-Up Erwin Resource Enrichments Using the Migration Utility

- To take the above Backups, refer to the EDC upgrade guide.

- Effective in version 10.5.1, when you upgrade Enterprise Data Catalog from a supported version or apply the hotfix on 10.5, the installer validates if you have backed up the catalog. When you roll back the hotfix, the installer validates if a catalog backup exists.

- If you deployed a cluster on a multi-node environment, you must perform the following steps before taking the catalog backup:

- Add the Shared File Path property in the Informatica Cluster Service configuration.

- Restart the Informatica Cluster Service.

- Restart the Catalog Service.

- Re-index the catalog.

This NFS directory is used only when taking LDM backup in a multi-node environment.

The NFS path stays empty and is populated only while the backup is taken. When the backup completes, the files are deleted from the shared file system directory.

Verify the following directory prerequisites:

The directory must be empty.

The directory must have the NFS file system mounted.

The username to access the directory must be the same in all cluster nodes.

The user configured to access the directory must be a non-root user.

- In 10.5.1.0.1 and later versions, rsync is used instead of scp for file transfers.

- In 10.5.1.0.2 and later versions, the MaxStartups parameter in the SSH server configuration file is validated. The maximum number of unauthenticated concurrent SSH connections must be set to a value greater than or equal to 30. To set the value of this parameter to 30, open the /etc/ssh/sshd_config file, change the value of the MaxStartups parameter to 30:30:100, and then restart the sshd daemon.

- If Data Asset Analytics is enabled, complete the Data Asset Analytics Prerequisites given here.

- Configure the Process Termination Timeout

- Run the Data Asset Analytics Utility

- Informatica installation fails on Azure on RHEL 8 with the following error:

<Install_Path>/server/bin/libpmjrepn.so: libidn.so.11: cannot open shared object file: No such file or directory

Before running the installer, work with your Linux administrator to install the libidn11 package on the machine where you run the Informatica installation.
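The MaxStartups requirement from the list above can be checked before the installer validates it. The sketch below reads a single sshd_config file and compares the first field of the `start:rate:full` value with 30. It is a simplification: it does not follow Include directives or Match blocks, and the function name `maxstartups_ok` is illustrative.

```shell
#!/bin/sh
# Hypothetical check: succeed when the first MaxStartups field is at least 30.
# Usage: maxstartups_ok [path to sshd_config]
maxstartups_ok() {
    config=${1:-/etc/ssh/sshd_config}
    # Use the first uncommented MaxStartups line; commented lines start with '#'
    # and therefore never match the keyword comparison.
    start=$(awk 'tolower($1) == "maxstartups" { split($2, f, ":"); print f[1]; exit }' "$config")
    # A missing setting fails the check, because the parameter was never raised.
    [ -n "$start" ] && [ "$start" -ge 30 ]
}

# Example call:
# maxstartups_ok /etc/ssh/sshd_config && echo "MaxStartups is sufficient"
```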

Note: Effective in version 10.5.2, the Advanced Scanners tool is renamed the MetaDex tool. The installer panels and the install and upgrade guides still refer to Advanced Scanners.

Here are some of the additional checks to be performed:

- In a multi-node domain, make sure that the binaries are copied to all nodes and that the upgrade is performed on the master gateway node before you upgrade other nodes.

- Make sure to take the catalog service backup using the export utility and command line.

- Rename the binaries zip files and move to <InstallerExtractedDirectory>/source before starting the upgrade. Rename ScannerBinaries_[OStype].zip to ScannerBinaries.zip. For example, rename ScannerBinaries_RHEL.zip to ScannerBinaries.zip

Note: You can contact the Informatica Shipping team to obtain the download links for Informatica 10.5.1 server and client installers.

Plan for the Number of Nodes in the ICS Deployment

You can deploy Informatica Cluster Service on a single data node, or on three or six data nodes to automatically enable high availability for the service. A data node represents a node that runs the applications and services. If you plan to deploy Data Privacy Management with EDC, you can arrange a six-data-node deployment. In a six-node deployment, the nodes are split equally between EDC and Data Privacy Management.

You can configure a maximum of three service instances of Nomad, Apache Solr, and MongoDB to install EDC. You cannot configure multiple instances of the same service on a node.

Note: You cannot configure more than one PostgreSQL database instance.

If the Solr service is deployed on multiple nodes, the Informatica cluster shared file path must be specified in the ICS properties to take the Solr backup.

Refer to this document for more information.

A processing node represents a node where profiling jobs or metadata scan jobs run. There are no restrictions on the number of processing nodes that you can configure in the deployment.

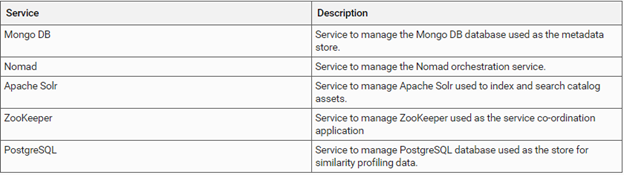

The Informatica Cluster Service uses the following services to run and manage EDC:

Prepare to Configure Custom SSL Certificates

You can use the default SSL certificates included with the Informatica domain or use SSL certificates of your choice to secure Informatica Cluster Service. If you plan to use SSL certificates of your choice, referred to as custom SSL certificates, review the following scenarios:

Scenario 1. Custom SSL certificate that can sign other certificates.

During install or upgrade, you have a custom SSL certificate for the Informatica domain that you can use to sign other certificates. For scenario 1, you do not need to perform any manual steps.

Scenario 2. Custom SSL certificate that cannot sign other certificates, and you want to generate CA-signed certificates.

You have a custom SSL certificate for the Informatica domain during an install or upgrade, but you cannot use this certificate to sign other certificates. You want to generate CA-signed certificates for the cluster and clients.

Scenario 3. Custom SSL certificates cannot sign other certificates, and you want to use a different set of custom SSL certificates that can sign other certificates.

You have a custom SSL certificate for the Informatica domain during an upgrade, but you cannot use this certificate to sign other certificates. You have a set of other custom SSL certificates that you want to use to sign other certificates.

Refer to the Upgrade Guide for more information.

Note: In Informatica 10.5.0 and later versions, Informatica Cluster Service (ICS) components such as Nomad, Solr, PostgreSQL, Zookeeper, and MongoDB use mutual TLS (mTLS) authentication.

Because of the mTLS requirement, the existing CA-signed or self-signed certificates (infa_keystore.jks / Default.keystore) that are used for the Informatica node and the Admin Console application cannot be reused with ICS components.

The existing CA-signed or self-signed certificates (infa_keystore.jks / Default.keystore) continue to work for the Informatica node process and for other Tomcat applications such as EDC, EDP, DPM, Analyst Tool, and DIS. However, for the ICS components that run on the cluster machines, you must generate a different set of certificates with the Informatica-provided utility.

Make sure these certificates are generated before the upgrade to avoid downtime.

Please refer to the following KB articles for more details:

Upgrading EDC deployed in an internal cluster involves the following steps:

Upgrading EDC deployed on an external cluster involves the following steps:

The steps followed for Preparing Backups:

- Prepare the Domain. Back up the domain and verify database user account permissions.

- Prepare the Model repository, and back up the Model repository.

- Back up the database schema of Data Asset Analytics.

- Back up the Catalog using the export utility: Refer to this article.

- Back-Up the Catalog Using the LDM backupContents Command.

- Note: You must back up the Catalog using both the methods listed to upgrade and restore data successfully or restore data in an upgrade failure event.

- Because of the change from Hadoop to MongoDB and Nomad, you must create an LDM backup in the form of an export folder. The backup utility converts the existing 10.4.x Hadoop data into a format that can be imported into 10.5.x. You cannot take a 10.4.1 ldm backupContents backup and restore it directly to 10.5.x with the restore command. Instead, use the Migrate content command, which takes the export folder as a command-line argument and migrates the catalog content from 10.4.x to 10.5.x.

- Note: Regardless of the upgrade type (in-place, parallel, or a fresh 10.5.x installation followed by a restore of the catalog content from 10.4.x), taking the catalog backup with the export utility is mandatory.

- Optional: Take the HDFS backup of Service Cluster Name, which can be used when the usual approach cannot be followed because of unexpected errors. For more information, refer to HOW TO: Backup EDC

- If you upgrade to a cluster enabled for Kerberos and SSL, back up the domain truststore files.

- If you upgrade to an SSL-enabled cluster, take a backup of the default keystore files.

- If you are using the default SSL certificate to secure the Informatica domain, copy the default.keystore file to a directory that you can access after the upgrade.

- Back up all the keystores and truststores used by different services. Also, back up the Sitekey, domains.infa, server.xml, and nodemeta.xml files.

- Additionally, it is always recommended to take the complete INFA_HOME directory backup for fallback purposes.

- Take the backup of Enrichments for tableau resources. Refer to KB article: HOW TO: Backup and Restore Tableau enrichments between EDC versions

- Take the backup of DAA Database schema: Database export or database dump.

- Back-Up Primary key-Foreign key Enrichments Using the Migration Utility. Refer to this document.

- If you have configured the repository for Advanced Scanners, perform a backup of the Advanced Scanners repository database. Refer to the Upgrade guide.

- Back-Up Erwin Resource Enrichments Using the Migration Utility. Refer to this Upgrade Guide.

- Applicable if you have SAP BO Resource and reports created with Universe Design Tool. Refer to this article.

Disabling Application services

- Catalog Service

- Informatica Cluster Service

- Content Management Service

- Data Integration Service

- Model Repository Service

To disable an application service, select the service in Informatica Administrator and click Actions > Disable Service.

Delete the Contents in the Cluster

If you are reprovisioning the Internal Hadoop Cluster: After taking backups, shut down the Hadoop cluster, clean up and re-purpose cluster nodes.

Disable IHS, and then perform "Clean Cluster" by using the infacmd ihs cleanCluster command.

After you run the infacmd ihs cleanCluster command, log in to each cluster node as a non-root user with sudo privileges and delete the contents of the /tmp directory that the yarn user owns.

To delete the contents, run the following command as a non-root user with sudo privileges: find /tmp/ -user yarn -exec rm -fr {} \;

Disable the PostgreSQL Service

Use the following command on the machine where Informatica Administrator runs to disable the PostgreSQL Service: service postgresql-<PostgreSQL version> stop.

For example, if you are using PostgreSQL version 9.6, use the following command: service postgresql-9.6 stop.

ICS installs PostgreSQL 12. You must remove all old PostgreSQL instances from the cluster machine if they exist.

To show the status of PostgreSQL: systemctl status postgresql

To remove the old versions of PostgreSQL: sudo yum remove postgre*

To remove the data of the older PostgreSQL versions: sudo rm -rf /var/lib/pgsql/*

Uninstall PostgreSQL Service

Use the following command on the machine where Informatica Administrator runs to uninstall PostgreSQL Service: sudo yum remove postgre* and sudo rm -rf /var/lib/pgsql/*.

Shut Down the Informatica Domain

You must shut down the domain before you upgrade. To shut down the domain, stop the Informatica service process on each node in the domain.

Complete the Default SSL Configuration

If the Informatica domain is enabled for SSL using the default SSL certificates, you can configure the Informatica Cluster Service and Catalog Service to use the 10.5.x keystore.

To configure the services to use the 10.5.x keystore, perform the following steps:

- Disable the Informatica Cluster Service and the Catalog Service.

- Edit the process options for the Informatica Cluster Service and Catalog Service and provide the location of the 10.5.x Default.keystore file. Default path to the file is $INFA_HOME/tomcat/conf/Default.keystore.

- From the 10.5.x $INFA_HOME/services/shared/security/infa_truststore.jks file, remove the existing value configured for the infa_dflt alias and import the $INFA_HOME/tomcat/conf/Default.keystore file.

- Enable the Informatica Cluster Service and Catalog Service.
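The truststore step above is commonly done with the JDK `keytool` utility. The sketch below only prints two candidate commands and runs nothing. It rests on assumptions: that "import" here means `keytool -importkeystore`, that both stores are password protected, and that you supply the passwords in place of the placeholders. The helper name `truststore_plan` is hypothetical.

```shell
# Print keytool commands for replacing the infa_dflt entry in the truststore.
# <truststore password> and <keystore password> are placeholders.
# Usage: truststore_plan <INFA_HOME>
truststore_plan() {
  home=$1
  truststore="$home/services/shared/security/infa_truststore.jks"
  keystore="$home/tomcat/conf/Default.keystore"
  printf '%s\n' \
    "keytool -delete -alias infa_dflt -keystore $truststore -storepass <truststore password>" \
    "keytool -importkeystore -srckeystore $keystore -destkeystore $truststore -srcstorepass <keystore password> -deststorepass <truststore password>"
}

# Example:
#   truststore_plan "$INFA_HOME"
```

Taking a copy of infa_truststore.jks before changing it keeps a way back if the import does not behave as expected.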

Pre-Validation Checks

- Run the Informatica Upgrade Advisor: Informatica provides utilities to facilitate the Informatica services installation process. Run the utility before you upgrade Informatica services. The Informatica Upgrade Advisor validates the services and checks for obsolete services in the domain before you perform an upgrade. It is packaged with the installer, where you can select the option to run it.

- Run the Pre-Installation (i10Pi) System Check Tool to verify whether the domain machine meets the system requirements for a fresh installation. The tool is part of the installer, and you can choose this option while running the installer for a fresh installation.

- Run the ICS pre-validation utility to verify whether the cluster machine meets the system requirements for ICS installation. The utilities are located under <Location of installer files>/properties/utils/prevalidation

To run the utility, edit the input.properties file with appropriate cluster node information.

Then run the below command:

java -jar InformaticaClusterValidationUtility.jar -in <Location of the properties file configured in the prerequisites>
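A small guard can catch a wrong path to the properties file before the utility starts. This is a sketch under assumptions: the helper name `prevalidation_cmd` is hypothetical, and it prints the command shown above instead of running it.

```shell
# Build the pre-validation command only if the properties file is readable.
# Usage: prevalidation_cmd <path to input.properties>
prevalidation_cmd() {
  props=$1
  if [ ! -r "$props" ]; then
    echo "properties file not readable: $props" >&2
    return 1
  fi
  printf 'java -jar InformaticaClusterValidationUtility.jar -in %s\n' "$props"
}

# Example, run from the prevalidation directory:
#   prevalidation_cmd ./input.properties
```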

Refer to this document for more information.

Complete the following postrequisites after you upgrade the Informatica domain:

- Set the INFA_TRUSTSTORE and INFA_TRUSTSTORE_PASSWORD environment variable values if the domain is enabled for Secure Sockets Layer (SSL).

- Copy the third-party JAR or ZIP files that you had configured for resources such as Teradata, JDBC, and IBM Netezza from <Informatica installation directory>/services/CatalogService/ScannerBinaries to the same location on the machine that hosts the upgraded Informatica domain.

- Add the Informatica Domain License.

- Update all the application services with the new license.

- Enable the application services, except the Catalog Service. From Informatica Administrator, enable the following application services and upgrade them:

- Model Repository Service

- Data Integration Service

- Content Management Service

- Verify the Gateway User Prerequisites: The gateway user must be a non-root user with sudo access. You must enable a passwordless SSH connection between the Informatica domain and the gateway host for the gateway user.

- Update the sudoers file to configure sudo privileges to the ICS gateway user.

- Enable the Informatica Cluster Service if the upgrade was on an internal cluster. If you upgraded from an external cluster, create and enable the Informatica Cluster Service. When you create the Informatica Cluster Service, you must use the license for the 10.5.x version of Informatica Cluster Service. Then associate the Informatica Cluster Service with the Catalog Service.

- Enable the Catalog Service. Enable the email service if you had configured the service for the Catalog Service. Upgrade the Catalog Service. The warning below appears in the Catalog Service once the upgrade succeeds.

Migrate the Backed-Up Catalog Content

After you upgrade the Catalog Service, use the infacmd.sh LDM migrateContents command to migrate the data that you backed up using the export utility. The migrateContents command makes the 10.4 catalog content compatible with 10.5.x, which is why you run it after upgrading the Catalog Service. Refer to this document for more information.

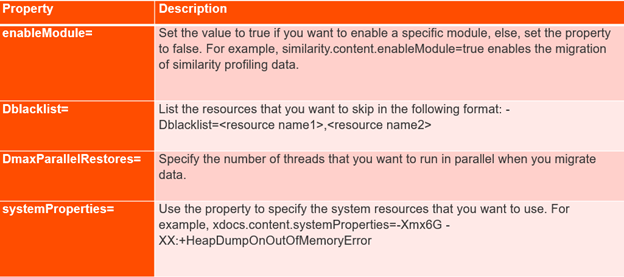
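As a sketch of how the migrateContents command line is put together, the helper below assembles it from its parts and prints it. The option names (-un, -pd, -dn, -sn, -id, -verify) are taken from the verify sample in this article. The helper name `migrate_cmd` and the argument order are hypothetical.

```shell
# Print an infacmd.sh LDM migrateContents command line from its parts.
# Pass "verify" as the sixth argument to append -verify.
# Usage: migrate_cmd <user> <password> <domain> <catalog service> <export dir> [verify]
migrate_cmd() {
  cmd="./infacmd.sh LDM migrateContents -un $1 -pd $2 -dn $3 -sn $4 -id $5"
  if [ "${6:-}" = "verify" ]; then
    cmd="$cmd -verify"
  fi
  printf '%s\n' "$cmd"
}

# Example with placeholder values:
#   migrate_cmd Administrator '<password>' Domain CS /path/to/export
```

Printing the command first makes it easy to confirm the domain name, service name, and export directory before the migration starts.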

Performance Parameters for Migrate Content

If you want to tune the performance parameters for migrating data or skipping failed resources, modify the parameters specified in the MigrationModuleConfigurations.properties file available at the following location: <INFA HOME>/services/CatalogService/Binaries.

Medium Load

The default memory configuration is sufficient for the catalog restore process with 20 million assets on a 32 GB domain machine. If the domain node is shared across multiple applications (DPM, EDP, EDC), you can limit the maximum heap memory of the export.jar process by updating the following property in the migration module configuration file:

$INFA_HOME/services/CatalogService/Binaries/MigrationModuleConfigurations.properties

Set xdocs.content.systemProperties=-Xmx10G

High Load

The default memory configuration is sufficient for the catalog restore process with 50 million assets on a 64 GB domain machine. If the domain node is shared across multiple applications (DPM, EDP, EDC), you can limit the maximum heap memory of the export.jar process by updating the following property in the migration module configuration file:

$INFA_HOME/services/CatalogService/Binaries/MigrationModuleConfigurations.properties

Set xdocs.content.systemProperties=-Xmx15G
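The heap setting for either load profile can be applied with a small helper that replaces the property if it exists and adds it otherwise. This is a minimal sketch: the helper name `set_property` is hypothetical, and it assumes each property sits on one line in `key=value` form with no spaces around the equals sign.

```shell
# Set key=value in a Java-style properties file.
# An existing line for the key is replaced; otherwise the key is appended.
# Usage: set_property <file> <key> <value>
set_property() {
  file=$1
  key=$2
  value=$3
  tmp=$(mktemp) || return 1
  # Keep every line whose key differs, then append the new setting.
  awk -F= -v k="$key" '$1 != k' "$file" > "$tmp"
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Write back through the original file so its ownership and mode stay the same.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example for the medium-load profile:
#   set_property "$INFA_HOME/services/CatalogService/Binaries/MigrationModuleConfigurations.properties" \
#     xdocs.content.systemProperties -Xmx10G
```

Back up the properties file before editing it so the default configuration can be restored after the migration.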

For Similarity Content Restore

It is recommended to set a minimum of 4 GB heap in the infacmd.sh file. Set the maximum Java heap memory allocation pool to 4096m and the initial Java heap memory allocation pool to 64m.

In infacmd.sh, update the following setting: ICMD_JAVA_OPTS="-Xms64m -Xmx4096m"

- Verify the Migrated Content: You can use the infacmd migrateContents -verify command as shown in the following sample to verify the migrated content: ./infacmd.sh LDM migrateContents -un Administrator -pd Administrator -dn Domain -sn CS -id /data/Installer1050/properties/utils/upgrade/EDC/export -verify.

- Remove the Custom Properties for the Catalog Service related to the Hadoop cluster.

The following are the Post Upgrade Tasks:

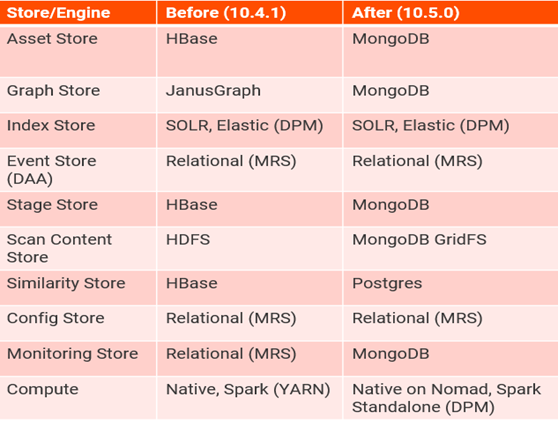

Q1. What are the changes included in 10.5 that I should be aware of?

A. In 10.5, the embedded cluster components previously shipped with EDC (Hortonworks Data Platform) are replaced by a different technology stack. The details are as follows. The set of components will be known as the Informatica Cluster Services.

Q2. Why are we making the architecture change from Hadoop to Nomad?

A. HDP was planned to reach end of life (EOL) at the end of 2021, which created an opportunity to rethink EDC's underlying components. With Hadoop seeing less adoption in the market than a few years ago, it makes sense to choose newer technologies that will be better supported and maintained in the future.

Q3. What is the support model for the component replacing the Hortonworks Data Platform (HDP)?

A. EDC will provide full support for the deployment and maintenance of the component shipped with EDC 10.5. This includes:

- MongoDB

- Nomad and mTLS

- Apache Solr

- PostgreSQL

Q4. Will EDC 10.5 continue to support deployment on the external cluster?

A. No. All new customers will have to provide the necessary hardware and deploy the embedded cluster on it. Customers who are running with an external cluster will be invited to migrate to the embedded cluster deployment model as they upgrade to 10.5. Communication will be sent to customers who use an external cluster to help plan 10.5 adoption before the end of 2020.

Q5. What will be the upgrade process from the version before 10.5 to 10.5?

A. For customers using the embedded cluster today, the upgrade will be like the initial upgrade: back up the content, clean up the cluster nodes (using the provided scripts), upgrade to 10.5, deploy the new service components, restore the catalog content, and upgrade the content. For customers using the external cluster, cleanup of the cluster nodes will not be necessary.

Q6. Should Informatica-related environment variables be unset before upgrading?

A. Yes, it is recommended to unset all the environment variables related to Informatica before the upgrade.
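One way to find and clear such variables in the current shell session is sketched below. It assumes that the Informatica-related variables start with the prefix INFA (for example INFA_HOME or INFA_TRUSTSTORE). Anything that does not follow that prefix, such as entries added to PATH, still has to be reviewed by hand. The helper names are hypothetical.

```shell
# List the names of environment variables that start with INFA.
list_infa_vars() {
  env | awk -F= '$1 ~ /^INFA[A-Za-z0-9_]*$/ { print $1 }'
}

# Unset those variables in the current shell session.
unset_infa_vars() {
  for name in $(list_infa_vars); do
    unset "$name"
  done
}

# Example: review first, then clear.
#   list_infa_vars
#   unset_infa_vars
```

Variables that are set in a login profile come back in a new session, so the profile may also need a temporary edit for the duration of the upgrade.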

Q7. If we skip configuring the Advanced Scanner repository during the 10.5 upgrade or installation, can we install the Advanced Scanner later?

A. Yes, we can install it later. Refer to this document for more information.

Q8. Do we need to install PostgreSQL on the cluster machine, and what version?

A. No, PostgreSQL is bundled with the Informatica installer.

Q9. Can we use the root user for installing the 10.5 EDC domain?

A. No, we need to use a non-root user for the EDC installation. If we use the root user, the PostgreSQL installation fails, because PostgreSQL itself requires a non-root user.

Q10. Do we need to install MongoDB on the cluster machine?

A. No, MongoDB is bundled with Informatica installer.

Q11. Is it mandatory to rename ScannerBinaries_[OStype].zip?

A. Yes, it is mandatory to rename the binaries files as below.

Rename ScannerBinaries_[OStype].zip to ScannerBinaries.zip. For example, rename ScannerBinaries_RHEL.zip to ScannerBinaries.zip.
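The rename can be scripted with a check on the file name so that an unrelated archive is not renamed by mistake. This is a minimal sketch: the helper name `rename_scanner_binaries` is hypothetical.

```shell
# Rename ScannerBinaries_<OStype>.zip to ScannerBinaries.zip in the same directory.
# Usage: rename_scanner_binaries <path to ScannerBinaries_<OStype>.zip>
rename_scanner_binaries() {
  src=$1
  case "$(basename "$src")" in
    ScannerBinaries_*.zip) ;;
    *) echo "unexpected file name: $src" >&2; return 1 ;;
  esac
  mv -- "$src" "$(dirname "$src")/ScannerBinaries.zip"
}

# Example:
#   rename_scanner_binaries ./ScannerBinaries_RHEL.zip
```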

Q12. How to use Technical Preview Features in EDC?

A. Contact Informatica Shipping Team to obtain Technical Preview License to use Technical Preview Features in EDC.

Q13. Where can I see the Advanced Scanner option in the domain?

A. The Advanced Scanner is not a domain service. It is a separate web application that you can access at <domain host>:<MDX port>, using the port provided during installation.

Q14. How do you proceed when the Advanced Scanner UI shows the error "No License available" after you install the Advanced Scanner as part of the EDC installation?

A. After you access the URL, set the global variables in the Advanced Scanner UI to point to the EDC URL, username, and password, and then restart the MetaDex server. Refer to this document for more information.

Q15. Where can I find the Advanced Scanner binaries?

A.

- Advanced Scanner binaries are present in the location: <INFA_HOME>/services/CatalogService/AdvancedScannersApplication/app

Q16. How to restart the Advanced Scanner?

A.

- To Stop Advanced Scanner: <INFA_HOME>/services/CatalogService/AdvancedScannersApplication/app/server.sh stop

- To Start Advanced Scanner: <INFA_HOME>/services/CatalogService/AdvancedScannersApplication/app/server.sh &

Q17. Where can I find the logs for the services such as MongoDB, Apache Solr, ZooKeeper, Nomad, and PostgreSQL?

A. The log files are present in the following directory by default: /opt/Informatica/ics. If you configured a custom directory for the services, the log files are present in the /ics directory in the custom directory.

Q18. Will there be Kerberos in the Nomad cluster?

A. No. Kerberos is not used. Security is handled through another mechanism, with mTLS authentication and encryption implemented.

Q19. What is the use of the ICS Status File?

A. The ICS status file is created at /etc/infastatus.conf and includes the domain name and the ICS cluster service name. It is created on all cluster nodes, and ICS uses it to ensure that no other ICS shares the cluster node.

Q20. Can we add/delete processing/data nodes?

A. Only processing nodes (Nomad client nodes) can be added or deleted. The number of data nodes cannot be changed, not even increased.

Q21. What if the user wants to add/delete the data node?

A. Workaround: Back up data, clean cluster, and create a new ICS with a new number of data nodes. For more FAQs, please refer to the following link.

Catalog Backup and Restore failure scenarios:

ERROR 1:

[ICMD_10033] Command [BackupData] failed with error [[BackupRestoreClient_10002] Following error occurred while taking backup of data : [BackupRestoreClient_00027] Snapshot with name [ldmCluster] in HDFS snapshot directory [/Informatica/LDM/ldmCluster] already exists.

Cause & Solution:

The error occurred due to an external snapshot of the Catalog for Enterprise Data Catalog deployed in an internal cluster. Run the removesnapshot command to remove the external snapshot, and then run the BackupContents command to create a snapshot.

ERROR 2:

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/cat_ihs_backup/scanner/MRS_INFACMD_10.zip could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and no node(s) are excluded in this operation.

Cause & Solution:

You might encounter this error while restoring HDFS data if there is insufficient free disk space in the machine where data must be restored. Verify that the machine where HDFS data is restored has sufficient free disk space.
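A quick pre-check of free space on the restore target can be sketched as follows. The helper name `has_free_space` is hypothetical, and the required size is your own estimate based on the backup size. The sketch relies on the POSIX output format of `df -Pk`, where the fourth column of the second line is the available space in KB.

```shell
# Succeed if the file system that holds <path> has at least <required KB> free.
# Usage: has_free_space <path> <required KB>
has_free_space() {
  avail_kb=$(df -Pk "$1" | awk 'NR == 2 { print $4 }')
  [ -n "$avail_kb" ] && [ "$avail_kb" -ge "$2" ]
}

# Example: require about 50 GB on a hypothetical restore directory.
#   has_free_space /data/restore 52428800 || echo "not enough free space"
```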

ERROR 3:

java.util.concurrent.ExecutionException: java.lang.OutOfMemoryError: GC overhead limit exceeded

Cause & Solution:

You might encounter this error when you restore the similarity store backup and the default Java heap size of 512 MB is configured. Set the ICMD_JAVA_OPTS environment variable to -Xmx4096m to increase the heap size to 4096 MB.
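For a single shell session, the variable can be set as shown in this short sketch before you run the restore command in the same session. It assumes a Bourne-compatible shell.

```shell
# Raise the infacmd heap to 4096 MB for the current shell session.
export ICMD_JAVA_OPTS="-Xmx4096m"
echo "ICMD_JAVA_OPTS=$ICMD_JAVA_OPTS"
```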

ERROR 4:

When you migrate the backed-up catalog data, the following error message appears in the Migration.log file:

Error from server at https://invrh84edc004.informatica.com:8983/solr/collection1: Error from server at null: Could not parse response with encoding ISO-8859-1

org.apache.solr.client.solrj.impl.HttpSolrClient$RemoteSolrException: Error from server at https://invrh84edc004.informatica.com:8983/solr/collection1: Error from server at null: Could not parse response with encoding ISO-8859-1

Solution:

Verify that ntpd is synchronized between the Informatica domain node and the cluster nodes to resolve the issue.
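A rough way to spot clock drift between the domain node and a cluster node is to compare their epoch seconds. This is a sketch, not a replacement for checking the ntpd status itself: the helper name `clock_skew` and the host name in the example are hypothetical, and the example assumes passwordless SSH to the cluster node.

```shell
# Absolute difference, in seconds, between two epoch timestamps.
# Usage: clock_skew <epoch seconds A> <epoch seconds B>
clock_skew() {
  diff=$(( $1 - $2 ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( -diff ))
  fi
  echo "$diff"
}

# Example (cluster-node.example.com is a placeholder host name):
#   clock_skew "$(date +%s)" "$(ssh cluster-node.example.com date +%s)"
```

A result of more than a second or two suggests that time synchronization on one of the machines needs attention. The SSH round trip itself adds a small delay to the measurement.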

ERROR 5:

Error while running the backup command for Catalog: Backup file validation failed. Error: [java.lang.Exception: The .zip file: [/opt/informatica/backup/backup.zip] does not contain a backup of the following stores: [SEARCH]. Provide a backup file that contains the backup of all the stores: [SEARCH,ASSET,ORCHESTRATION,SIMILARITY]]

Solution:

If you deployed a cluster on a multi-node environment, you must perform the following steps before taking the catalog backup:

- Add the Shared File Path property in the Informatica Cluster Service configuration.

- Restart the Informatica Cluster Service.

- Restart the Catalog Service.

- Re-index the Catalog.

This NFS directory is used only when you take an LDM backup in a multi-node environment. The NFS path stays empty and is populated only while the backup is taken. On completion, the backup process deletes the files from the shared file system directory.

Verify the following directory prerequisites:

- The directory must be empty.

- The directory must have the NFS file system mounted.

- The username to access the directory must be the same in all cluster nodes.

- The user configured to access the directory must be a non-root user.
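Two of the prerequisites above, the empty directory and the non-root user, can be checked from the shell. The sketch below uses hypothetical helper names. The NFS mount and the matching user name on every cluster node still need a manual check.

```shell
# Succeed if the directory exists and contains no entries (hidden ones included).
# Usage: dir_is_empty <directory>
dir_is_empty() {
  [ -d "$1" ] && [ -z "$(ls -A "$1")" ]
}

# Succeed if the current user is not root.
is_non_root() {
  [ "$(id -u)" -ne 0 ]
}

# Example with a placeholder path:
#   dir_is_empty /mnt/ics_shared && is_non_root && echo "directory checks passed"
```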

For more information, refer to the following KBs:

FAQ: Is it mandatory to have Cluster Shared File System for ICS service in EDC 10.5?

ERROR 6:

After you enable the Catalog Service, the Catalog Administrator tool is not accessible

Solution:

To resolve the issue, delete the following directories:

<Informatica installation directory>/tomcat/temp/<Catalog Service name>

<Informatica installation directory>/services/CatalogService/ldmadmin

<Informatica installation directory>/services/CatalogService/ldmcatalog

ERROR 7:

The Informatica Cluster Service fails to start

Solution:

To resolve the issue, open the sshd_config file in the /etc/ssh directory. Change the value of the MaxSessions parameter to 60 and the MaxStartups parameter to 1024, and then restart the SSH daemon service.
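The two sshd_config changes can be scripted as sketched below. The helper name `set_sshd_option` is hypothetical. It writes the new setting as the first line of the file, ahead of any Match block, and removes other active lines for the same keyword while leaving commented lines untouched. Try it on a copy of the file first.

```shell
# Set a global sshd_config keyword to a value.
# Usage: set_sshd_option <config file> <keyword> <value>
set_sshd_option() {
  file=$1
  name=$2
  value=$3
  tmp=$(mktemp) || return 1
  {
    # New setting goes first so it is outside any Match block.
    printf '%s %s\n' "$name" "$value"
    # Keep all other lines; drop active lines for the same keyword
    # (sshd keywords are case-insensitive).
    awk -v n="$name" 'tolower($1) != tolower(n)' "$file"
  } > "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example, run against a copy and then validated with sshd's test mode:
#   sudo cp /etc/ssh/sshd_config /tmp/sshd_config.new
#   set_sshd_option /tmp/sshd_config.new MaxSessions 60
#   set_sshd_option /tmp/sshd_config.new MaxStartups 1024
#   sudo sshd -t -f /tmp/sshd_config.new
```

After the edited file is in place, restart the SSH daemon as described above, for example with `sudo systemctl restart sshd` on systemd-based systems.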

ERROR 8:

The Catalog Service fails with the following error message: "unable to create INITIAL extent for segment in tablespace."

Solution:

To resolve the issue, increase the size of the tablespace for the Model Repository Service database.

Here are the additional resources:

- Refer to What's New and Changed guide v10.5.2 to learn about new functionality and changes for current and recent product releases.

- Refer to the Release Notes v10.5.1 to learn about known limitations and fixes associated with version 10.5.1. The Release Notes also include information about upgrade paths, EBFs, and limited support, such as technical previews or deferments.

- Refer to Installation for EDC guide v10.5.2 to install EDC v10.5.2.

- Refer to Upgrade Guide v10.5.1 to know the upgrade steps.

- Refer to the article HOW TO: Validate the Resources and assets present in catalog export backup taken for EDC upgrade
